
The AGI Race: Four AI Frontiers Competing for Intelligence Supremacy in 2025

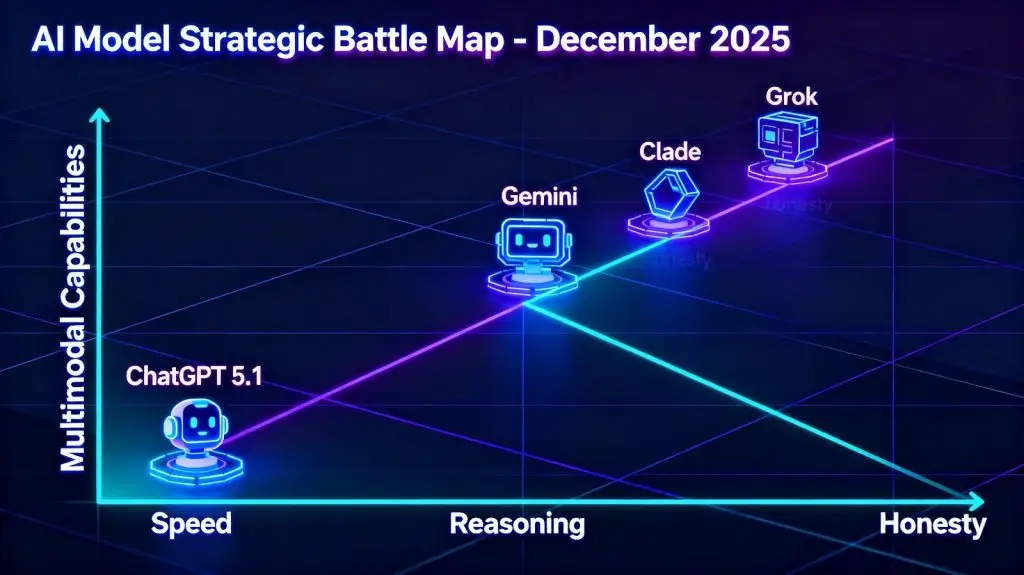

The artificial intelligence landscape has erupted into chaos. Within a single month in late 2025, the tech industry witnessed four major frontier model releases that are redefining what’s possible with large language models. Yet amid this unprecedented acceleration, OpenAI’s ChatGPT 5.1 is facing unexpected headwinds, raising critical questions about whether aggressive scaling and rapid iteration can still deliver meaningful advances toward AGI. The answer is far more nuanced, and more troubling, than most industry observers realize.

On November 12, 2025, OpenAI made GPT-5.1 available to the world as a smarter, more conversational successor to GPT-5. Two days later, on November 14, xAI quietly released Grok 4.1 Beta with stronger reasoning; in blind user testing it achieved an impressive 64.78% preference win rate against its predecessor. Only three days after that, on November 17, Google DeepMind released Gemini 3 Pro, its next-generation multimodal model, and Anthropic then announced Claude Opus 4.5, which proved competitive across all benchmark categories.

This staggering release velocity demonstrates an industry-wide consensus: the old paradigm of annual model cycles is dead. What matters now is rapid iteration, incremental refinement, and the ruthless pursuit of specific capabilities rather than general-purpose improvements.

But here’s where it gets uncomfortable for OpenAI: GPT-5.1, despite its polish and personalization features, is struggling to differentiate itself in real-world deployments. When you examine the competitive landscape closely, the story becomes one of architectural pivot points rather than raw capability gains.

The Competitive Landscape Shift: ChatGPT 5.1 Under Pressure as Gemini, Claude, and Grok Advance

GPT-5.1 arrived with a friendlier personality: it is warmer in conversation and uses adaptive reasoning, scaling its thinking effort to the difficulty of the task. OpenAI added tone presets (Friendly, Professional, Quirky, Candid) and enhanced customization that applies across chats in real time. It is a user-experience balancing act that sounds attractive but masks an underlying issue.

The underlying model upgrades are modest. GPT-5.1 High scores 76.3 percent on SWE-bench Verified (software engineering), up from GPT-5’s previous result. That is an improvement, but next to what competitors were shipping at the same time, it looks like a refinement rather than a revolution.

The critical weakness: while GPT-5.1 excels at conversational tasks and maintains reliable instruction-following, it exhibits exactly what users have complained about: partial answers, excessive brevity, over-filtering, and reluctance to engage with genuinely complex or controversial topics. The model shows what some researchers call “overthinking brevity,” spending computational resources deciding what not to say rather than providing comprehensive responses.

This represents a fundamental architectural choice. Unlike competitors pushing toward greater transparency and comprehensiveness, OpenAI has optimized for caution, and the personalization theater masks the absence of substantive capability breakthroughs. Compare Gemini 3 Pro’s benchmarks with GPT-5.1’s side by side and you see GPT-5.1 choosing polish over power, trading genuine capability gains for cosmetic improvements.

Google’s Gemini 3 Pro arrived with something OpenAI can’t easily match: a 1-million-token context window, roughly equivalent to 1,500 pages of text or 30,000 lines of code. This isn’t an incremental improvement; it’s a categorical shift in what’s possible with long-context reasoning.

The benchmarks tell a compelling story. On MMMU-Pro (multimodal reasoning), Gemini 3 Pro scored 81%; on Video-MMMU, 87.6%. More importantly, in vision and multimodal understanding, Gemini 3 Pro marks a generational leap from simple recognition to genuine visual and spatial reasoning. The model offers pixel-precise pointing, extracts 3D spatial relationships from 2D images, and can process video at 10 frames per second, 10x the default sampling rate. This enables real-time analysis of complex actions, such as capturing golf swing mechanics with unprecedented precision.

The model can ingest entire video transcripts, full codebases, and massive document collections in a single prompt, a capability that fundamentally changes how AI can be deployed in enterprise contexts.
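The 1,500-page and 30,000-line equivalences above imply rough ratios of about 667 tokens per page (1,000,000 ÷ 1,500) and about 33 tokens per line of code (1,000,000 ÷ 30,000). A minimal sketch that uses those implied ratios to estimate whether a document set or codebase fits in one prompt; the ratios are assumptions derived from the figures above (real tokenizers vary by language and content), and `fits_in_context` and its output reserve are hypothetical.

```python
# Rough context-window budgeting from the "1M tokens ~ 1,500 pages
# ~ 30,000 lines of code" equivalence. The per-page and per-line ratios
# are assumptions derived from those figures; real tokenizers vary.
CONTEXT_WINDOW_TOKENS = 1_000_000
TOKENS_PER_PAGE = CONTEXT_WINDOW_TOKENS / 1_500   # about 667 tokens per page
TOKENS_PER_LINE = CONTEXT_WINDOW_TOKENS / 30_000  # about 33 tokens per line


def estimate_tokens(pages: int = 0, lines_of_code: int = 0) -> int:
    """Estimate prompt size in tokens from page and line counts."""
    return round(pages * TOKENS_PER_PAGE + lines_of_code * TOKENS_PER_LINE)


def fits_in_context(pages: int = 0, lines_of_code: int = 0,
                    reserve_for_output: int = 32_000) -> bool:
    """Hypothetical helper: True if the estimated prompt plus a reserve
    for the model's reply stays within the context window."""
    needed = estimate_tokens(pages, lines_of_code) + reserve_for_output
    return needed <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    # Example: a 20,000-line repository plus a 200-page design document.
    print(estimate_tokens(pages=200, lines_of_code=20_000))
    print(fits_in_context(pages=200, lines_of_code=20_000))
```

With the default 32,000-token reply reserve (an arbitrary assumption), a 20,000-line repository plus a 200-page document comes to about 800,000 estimated tokens and fits; a full 30,000-line codebase alone would leave no room for the reply.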

More critically, Gemini 3 Pro powers Google AI Studio, a revolutionary platform that enables non-technical entrepreneurs to generate fully functional React web applications directly from natural-language descriptions. Early testing shows developers can build complete web apps in under 60 seconds, and features that previously required 60–80 iterative prompts with earlier models now often succeed on the first attempt.

On factuality, Gemini 3 Pro scores 68.8% on the FACTS Benchmark versus GPT-5’s 61.8%, a substantial gap in real-world reliability. Even Gemini 2.5 Pro outscores GPT-5, suggesting that architectural choices around multimodal grounding and video understanding translate directly into better factual accuracy.

The LMArena Text leaderboard has tightened dramatically: Gemini 2.5 Pro at 1457, GPT-5 at 1455, and Claude Opus 4.1 at 1451. The separation is statistical noise, but the trajectory matters: Gemini is ascending while GPT-5.1 is consolidating.
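LMArena scores sit on an Elo-style scale. Assuming the standard Elo logistic formula with a 400-point scale, a rating gap Δ translates into an expected preference rate of E = 1 / (1 + 10^(-Δ/400)). A minimal sketch applying that formula to the ratings quoted above; the function name is illustrative, and LMArena’s own confidence intervals are not modeled here.

```python
from itertools import combinations


def expected_win_rate(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Probability that A is preferred over B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


# Ratings as quoted in the paragraph above.
ratings = {"Gemini 2.5 Pro": 1457, "GPT-5": 1455, "Claude Opus 4.1": 1451}

# Expected head-to-head preference for every pair of models.
for (a, ra), (b, rb) in combinations(ratings.items(), 2):
    print(f"{a} vs {b}: {expected_win_rate(ra, rb):.1%}")
```

Even the largest gap here (6 points) corresponds to an expected preference of only about 50.9%, and a 10-point gap to about 51.4%, which is why differences this small are effectively a coin flip.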

xAI’s Grok 4.1 emerged from a two-week silent rollout where users unknowingly compared the new model against the previous version. The blind preference data is stunning: 64.78% of users preferred Grok 4.1 in direct comparisons, a margin that far exceeds typical incremental release improvements.

What’s driving Grok’s ascent? Emotional intelligence, reduced hallucination, and no artificial limits on response scope. Grok 4.1’s headline features include end-to-end encrypted chat, more fluid conversation with 91% context retention (far exceeding Grok 4.0’s 68%), and a 4.22% hallucination rate, a 65% reduction from its predecessor. Unlike ChatGPT 5.1’s cautious approach, Grok 4.1 answers difficult questions directly, doesn’t shy away from controversial topics, and maintains a conversational style that feels natural rather than templated.

The model includes “Big Brain” mode for complex problem-solving and integrates real-time information via X platform data. First-token latency dropped 33% to 1.2 seconds, and 500-word generation decreased from 16 seconds to 12 seconds. For users prioritizing honest, comprehensive answers over corporate risk mitigation, Grok has become the default choice.
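The percentages in the two paragraphs above can be cross-checked by backing out the implied baselines: if a metric fell by a fraction r to a new value v, the old value was v / (1 - r). A minimal sketch using only the figures quoted above; the helper names are illustrative, and the baselines it prints are derived values, not separately reported numbers.

```python
def implied_baseline(new_value: float, reduction: float) -> float:
    """Return the old value implied by `new_value` after a fractional
    `reduction` (e.g. 0.65 for a 65% drop)."""
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return new_value / (1 - reduction)


def relative_reduction(old_value: float, new_value: float) -> float:
    """Fractional drop from old_value to new_value."""
    return (old_value - new_value) / old_value


# Hallucination rate: 4.22% after a 65% reduction.
print(f"{implied_baseline(4.22, 0.65):.2f}% implied prior hallucination rate")
# First-token latency: 1.2 s after a 33% drop.
print(f"{implied_baseline(1.2, 0.33):.2f} s implied prior first-token latency")
# 500-word generation: 16 s -> 12 s.
print(f"{relative_reduction(16, 12):.0%} faster 500-word generation")
```

By this arithmetic, the 4.22% figure implies a predecessor rate of roughly 12%, the latency claim implies a prior first-token latency of about 1.8 seconds, and the 16 s to 12 s change is a 25% reduction.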

Critically, on pricing, Grok 4 Fast costs just $0.20 per million input tokens and $0.50 per million output tokens, making it accessible for cost-conscious developers and startups while still delivering impressive coding capabilities and production-grade image generation. This aggressive pricing strategy is forcing competitors to reconsider their own cost structures.

Anthropic’s Claude Opus 4.5 arrived quietly but with formidable credentials. The model excels at complex reasoning, architectural decision-making, and situations requiring genuine multi-step analysis. Users report that Claude offers superior reasoning transparency: the model shows its work, acknowledges uncertainty, and provides nuanced perspectives where ChatGPT 5.1 would offer sanitized summaries.

The agentic capabilities are particularly noteworthy. Claude Opus 4.5 can match Sonnet 4.5’s best performance on challenging benchmarks like SWE-bench Verified while using significantly fewer output tokens, and at maximum effort it surpasses Sonnet 4.5’s scores while remaining more token-efficient. With context compaction, advanced tool use, context management, and long-term memory, the platform lets Opus 4.5 run longer, coordinate subagents, and tackle deep research tasks with notable gains in accuracy.
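Context compaction is named above without detail. As a rough illustration of the general idea only (not Anthropic’s implementation), a minimal sketch: when a conversation exceeds a token budget, older turns are replaced by one summary message while the most recent turns are kept verbatim. The `Message` type, the word-count token estimate, and the `naive_summary` callback are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    role: str
    content: str


def rough_tokens(msg: Message) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(msg.content.split())


def compact(history: List[Message], budget: int, keep_recent: int,
            summarize: Callable[[List[Message]], str]) -> List[Message]:
    """If the history exceeds `budget` tokens, replace all but the last
    `keep_recent` messages with a single summary message."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(rough_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [Message("system", summarize(older))] + recent


def naive_summary(messages: List[Message]) -> str:
    # Hypothetical summarizer; a real agent would ask a model to summarize.
    return f"Summary of {len(messages)} earlier messages."


history = [Message("user", "word " * 50) for _ in range(10)]
compacted = compact(history, budget=200, keep_recent=3, summarize=naive_summary)
print(len(compacted), compacted[0].content)
```

Here ten 50-word messages (500 estimated tokens) exceed the 200-token budget, so the seven oldest collapse into one summary and three recent turns survive. The sketch does not check whether the summary itself fits the budget; a production loop would need to.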

More significantly, Anthropic announced the donation of the Model Context Protocol (MCP) to the Linux Foundation, establishing the Agentic AI Foundation in partnership with Block, OpenAI, Google, Microsoft, AWS, Cloudflare, and Bloomberg. This represents a strategic pivot toward open standards and ecosystem governance, positioning Claude not as a singular product but as part of a larger agentic infrastructure movement.

MCP has achieved remarkable adoption: 10,000+ active public servers, integration across ChatGPT, Gemini, Cursor, VS Code, and Microsoft Copilot. By donating stewardship to the Linux Foundation rather than maintaining proprietary control, Anthropic signaled that the future belongs to whoever can build the most reliable agent infrastructure, not necessarily the singular “smartest” model.
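MCP messages are JSON-RPC 2.0 requests; per the MCP specification, a client discovers a server’s tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. A minimal sketch that only builds those message payloads with the standard library; the `get_weather` tool and its arguments are hypothetical, and the transport (stdio or HTTP) and the `initialize` handshake that precedes these calls are omitted.

```python
import itertools
import json
from typing import Optional

# Monotonically increasing request ids, as JSON-RPC expects per session.
_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask a server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool; "get_weather" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Paris"}},
)

print(list_tools)
print(call_tool)
```

Because every MCP client and server speaks this same envelope, a tool exposed once can be called from any compliant host, which is the interoperability argument behind moving the protocol to neutral stewardship.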

Here’s the uncomfortable reality emerging from December 2025’s benchmark chaos: we’re hitting diminishing returns on pure model scaling. The December 2025 LMArena leaderboards show the top models separated by as little as 10 Elo points, which is statistical noise at this level.

Research from HEC Paris, and from AI researchers more broadly, is surfacing a pattern that challenges industry orthodoxy: pre-training scaling laws appear to be flattening. More data, more parameters, and more compute still produce improvements, but with declining marginal returns. GPT-5 was supposed to represent a generational leap; instead, GPT-5.1 feels like a point release.

Senior industry leaders back this observation. TechCrunch’s analysis of AI scaling trends reported that current scaling laws are showing diminishing returns, with Marc Andreessen noting that AI models seem to be converging on the same capability ceiling. Microsoft CEO Satya Nadella pointed to test-time compute as the next scaling frontier, while Anyscale co-founder Robert Nishihara emphasized that “in order to keep the scaling laws going, we also need new ideas.”

This has profound implications for the path to AGI. If traditional scaling is hitting limits, progress must come from:

Test-time compute scaling (models “thinking” longer at inference rather than during training), pioneered by OpenAI’s o1 and now adopted across competing models

Post-training optimization (reinforcement learning, synthetic data, constitutional AI), where Anthropic and DeepMind are showing particular strength

Multimodal depth and reasoning (deeper video understanding, code repository analysis, long-context synthesis), Gemini’s explicit focus

Agentic architecture (MCP integration, tool orchestration, multi-step planning), the industry consensus emerging around what comes next
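One simple, widely discussed form of test-time compute is self-consistency: sample several independent answers to the same question and take a majority vote, spending more inference work for a more reliable result. A minimal sketch of that technique; `make_noisy_solver` is a hypothetical stand-in for a model call that is right 60% of the time, and this is not a description of how o1 or any specific model works internally.

```python
import random
from collections import Counter
from typing import Callable


def self_consistency(sample: Callable[[], str], n: int) -> str:
    """Draw n independent answers and return the most frequent one."""
    votes = Counter(sample() for _ in range(n))
    return votes.most_common(1)[0][0]


def make_noisy_solver(p_correct: float, rng: random.Random) -> Callable[[], str]:
    """Hypothetical model stand-in: answers "42" with probability p_correct,
    otherwise picks one of several wrong answers at random."""
    wrong_answers = ["41", "43", "24", "7"]

    def solve() -> str:
        return "42" if rng.random() < p_correct else rng.choice(wrong_answers)

    return solve


def accuracy(n_samples: int, trials: int = 2_000, seed: int = 0) -> float:
    """Fraction of trials in which the majority vote is correct."""
    rng = random.Random(seed)
    solver = make_noisy_solver(0.6, rng)
    hits = sum(self_consistency(solver, n_samples) == "42" for _ in range(trials))
    return hits / trials


for n in (1, 5, 15):
    print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

With these made-up numbers, voting over more samples lifts accuracy well above the single-sample 60%, which is the basic trade test-time compute makes. It only helps when errors scatter across different wrong answers; a model that is consistently wrong gains nothing from more samples.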

ChatGPT 5.1 represents the old paradigm: throwing more compute at scale, refining instruction-following, and adding personalization layers. It’s masterfully executed, but it’s not pushing frontiers; it’s optimizing within boundaries rather than breaking them.

OpenAI declared “code red” following the Gemini 3 launch, according to multiple sources, including The Guardian and Reuters. CEO Sam Altman told staff it was a “critical time” for ChatGPT as it faces intense competition from Google’s new model. Tom Warren from The Verge reported that OpenAI is rushing GPT-5.2 for early December as an urgent competitive response.

The reporting pointed to OpenAI releasing GPT-5.2 in mid-December, with rumors that it could arrive as early as December 9-11. This frenzied rush reveals one important thing: OpenAI’s executives know that GPT-5.1 is no longer winning on the dimensions that count right now.

The code red mentality is not panic; it is a realistic assessment of a market in which Gemini 3 Pro, Claude Opus 4.5, and Grok 4.1 are all raising the bar faster than incremental updates can keep pace. When you have to ship a new release within weeks of a major launch, it signals that your competitive moat is eroding faster than anticipated.

The common complaints that “ChatGPT 5.1 doesn’t collaborate” and “returns partial answers” aren’t user error; they reflect deliberate architectural choices. OpenAI has prioritized:

Safety constraints over comprehensiveness: the model optimizes for refusing ambiguous requests rather than attempting nuanced analysis.

Brevity over depth: the model defaults to concise responses, requiring users to explicitly prompt for detail (the opposite of sophisticated AI behavior).

Filtered truth over uncomfortable honesty: controversial topics, complex tradeoffs, and morally ambiguous questions receive sanitized treatment.

Personalization theater over substantive capability improvements: tone presets are UX refinements, not capability breakthroughs.

Grok’s rise reflects user fatigue with this approach. When xAI offers honest, comprehensive answers without false politeness, users respond enthusiastically. Gemini’s strength lies in multimodal depth and genuine reasoning rather than conversational styling. Claude excels through transparency and intellectual honesty.

ChatGPT 5.1’s genuine strengths lie in developer APIs and coding assistance, not general-purpose intelligence.

The December 2025 landscape reveals something more fundamental than model competition: the industry is shifting from competing on singular models to competing on agentic infrastructure. Anthropic’s strategic decision to donate MCP is a critical acknowledgment that the bottleneck isn’t model intelligence; it’s system architecture.

Complete LLM API pricing comparison data from December 2025 reveals a three-tier market: premium models at $10–$75 per million output tokens (GPT-5, Claude Opus), mid-tier at $3–$15 (Gemini Pro, Claude Sonnet, Grok), and low-end at $0.40–$4 (nano/flash variants).

What’s striking is how aggressively Grok is pricing. At just $0.20 per million input tokens for Grok 4 Fast, xAI undercuts OpenAI’s $1.25 by over 6x while maintaining competitive capabilities. Gemini 3 Pro enters at $2.00 per million input tokens for prompts up to 200K tokens and $4.00 beyond 200K. Claude Opus 4.5 sits at $5.00 input and $25.00 output per million tokens, positioning it as the premium reasoning option for enterprises requiring maximum intelligence.
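The per-million-token prices above turn into per-request costs with one multiplication, plus a tier check for Gemini 3 Pro. A minimal sketch of that arithmetic, treating the quoted figures as input-token prices; the model labels are informal (not official API identifiers), output tokens are ignored, and applying Gemini’s higher rate to the whole prompt once it passes 200K tokens is an assumption.

```python
# Input prices in USD per million tokens, as quoted in the text above.
# Keys are informal labels, not official API model identifiers.
FLAT_INPUT_RATES = {
    "GPT-5": 1.25,
    "Grok 4 Fast": 0.20,
    "Claude Opus 4.5": 5.00,
}
GEMINI_TIER_LIMIT = 200_000  # prompt size (tokens) where Gemini 3 Pro's rate changes


def input_rate(model: str, prompt_tokens: int) -> float:
    """USD per million input tokens for a single request."""
    if model == "Gemini 3 Pro":
        # Assumption: the higher rate applies to the whole prompt once it
        # exceeds the 200K-token threshold.
        return 2.00 if prompt_tokens <= GEMINI_TIER_LIMIT else 4.00
    return FLAT_INPUT_RATES[model]


def input_cost(model: str, prompt_tokens: int) -> float:
    """Input-side cost in USD for one request (output tokens excluded)."""
    return prompt_tokens / 1_000_000 * input_rate(model, prompt_tokens)


models = ["Grok 4 Fast", "GPT-5", "Gemini 3 Pro", "Claude Opus 4.5"]
for tokens in (100_000, 300_000):
    for model in models:
        print(f"{tokens:>7,} tokens  {model:<16} ${input_cost(model, tokens):.3f}")

print(f"GPT-5 vs Grok 4 Fast input price ratio: "
      f"{FLAT_INPUT_RATES['GPT-5'] / FLAT_INPUT_RATES['Grok 4 Fast']:.2f}x")
```

At 100,000 input tokens per request this gives $0.02 for Grok 4 Fast, $0.125 for GPT-5, $0.20 for Gemini 3 Pro, and $0.50 for Claude Opus 4.5, and the $1.25 / $0.20 ratio works out to 6.25x, consistent with “over 6x.” Output pricing, caching discounts, and batch rates are left out and can shift the comparison considerably.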

This pricing pressure explains OpenAI’s “code red” status more clearly than any capability gap. If developers and enterprises can get 70% of ChatGPT’s functionality at 40% of the cost with Grok, or better multimodal capabilities with Gemini 3 Pro, the financial incentive to migrate accelerates dramatically.

Elon Musk announced he would delay Grok 5 to 2026, claiming it has a 10% chance of achieving AGI. Yet Musk’s strategy remains classic scale maximalism: bigger models, bigger compute clusters, bigger training runs. This worked spectacularly from GPT-2 to GPT-4, but it has worked far less spectacularly since.

The conventional wisdom that AGI may emerge by 2026–2027 needs heavy qualification. The scaling plateau is not a permanent ceiling, but it does imply that:

Quantitative improvements alone won’t deliver AGI; we need qualitative breakthroughs in reasoning architecture, world modeling, or agent coordination

The race is no longer about individual models but about who builds the most workable reasoning pipelines, the most stable tool orchestration, and the deepest multimodal insight.

Open standards matter; the MCP adoption across competing models suggests the industry recognizes that proprietary lock-in creates fragmentation, not advantage.

Test-time scaling is the new frontier: models “think” rather than simply “retrieve,” with computation happening at inference rather than during training.

None of these maps to ChatGPT 5.1’s architectural emphasis on personalization and conversational warmth.

For teams building applications in December 2025, the decision matrix has shifted dramatically:

Choose GPT-5.1 if: You prioritize conversational polish, reliable instruction-following, coding assistance, and a smooth developer API.

Choose Gemini 3 Pro if: You’re processing large documents, video, complex multimodal content, or building agents that need massive context windows; you want to avoid cost explosion on long-context queries.

Choose Claude Opus 4.5 if: You need the most transparent reasoning, are building security-sensitive applications or agentic workflows where accountability matters, or are doing research-intensive investigative work that demands discerning analysis.

Choose Grok 4.1 if: You want honest, unfiltered responses; you prioritize cost efficiency; you need real-time information access; you value conversational naturalness over corporate caution.
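Teams that route requests across several providers sometimes encode a matrix like this as a small rule table. A minimal sketch, assuming hypothetical requirement flags and a fixed check order (both illustrative choices, not vendor recommendations); the fallback to GPT-5.1 for general conversational and coding work reflects the article’s view of its remaining strengths.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    # Hypothetical flags distilled from the decision matrix above.
    long_context_or_video: bool = False
    needs_transparent_reasoning: bool = False
    cost_sensitive: bool = False
    needs_realtime_info: bool = False


def pick_model(req: Requirements) -> str:
    """Map requirements to a model following the matrix above.

    The order of checks is an assumption: when requirements conflict,
    earlier rules win. Validate any real routing on your own workload.
    """
    if req.long_context_or_video:
        return "Gemini 3 Pro"
    if req.needs_transparent_reasoning:
        return "Claude Opus 4.5"
    if req.cost_sensitive or req.needs_realtime_info:
        return "Grok 4.1"
    # Fallback: general conversational and coding work.
    return "GPT-5.1"


print(pick_model(Requirements(long_context_or_video=True)))
print(pick_model(Requirements(cost_sensitive=True)))
print(pick_model(Requirements()))
```

A fixed priority order keeps the routing predictable, but it hides trade-offs: a cost-sensitive long-context job goes to Gemini 3 Pro here even though its >200K-token rate is higher, so real routers usually score candidates rather than short-circuit.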

The business case for ChatGPT 5.1 has substantially narrowed. It’s no longer the default choice for most use cases.

OpenAI built a tremendous product for November 2024’s competitive landscape: conversational superiority, instruction-following reliability, and developer API smoothness. By December 2025, the competition had moved to different dimensions: multimodal reasoning, agentic orchestration, long-context synthesis, honest communication, and infrastructure standardization.

OpenAI’s “code red” isn’t because ChatGPT 5.1 is broken; it’s because strategic requirements have shifted faster than its architectural choices can accommodate. Personalization presets and a warmer tone are refinements, not revolutions. Adaptive reasoning is engineering polish, not a breakthrough capability.

What OpenAI chose to optimize for, conversational warmth and personalization, matters less than what the companies winning in December 2025 are building toward: infrastructure, reasoning depth, and honest engagement.

The AGI race isn’t being won by whoever releases the “smartest” model anymore. It’s being won by whoever builds the most capable reasoning systems, the most reliable tool orchestration, and the deepest integration with the data and tools that actually matter. On those dimensions, ChatGPT 5.1 is playing defense rather than offense.

Netanel Siboni is a technology leader specializing in AI, cloud, and virtualization. As the founder of Voxfor, he has guided hundreds of projects in hosting, SaaS, and e-commerce with proven results. Connect with Netanel Siboni on LinkedIn to learn more or collaborate on future projects.