
How AI can transform your operations, how to measure success, and the do’s and don’ts on the road to a smart, reliable system

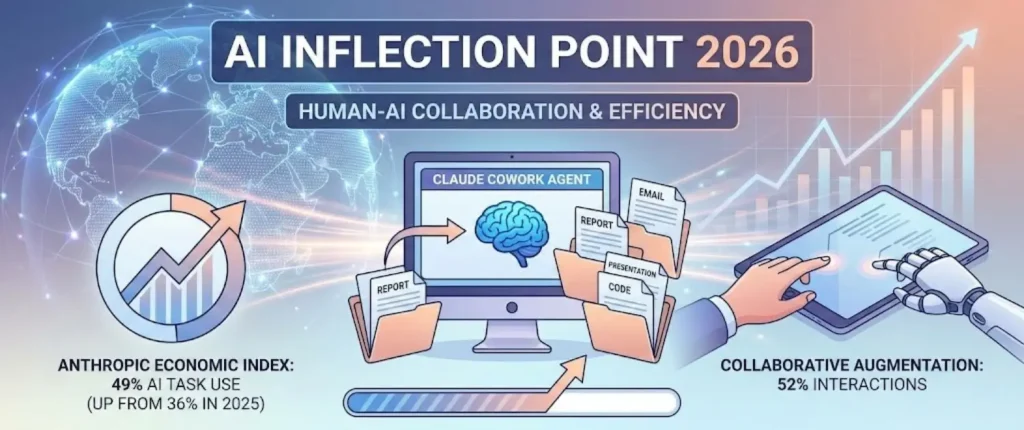

We are at a profound inflection point. In January 2026, Anthropic launched Claude Cowork, an autonomous desktop agent that reads, edits, organizes, and creates files on your computer without hand-holding. Weeks later, the Anthropic Economic Index showed that 49% of occupations now use AI for at least a quarter of their tasks, up from 36% when the Index launched in February 2025. College-level tasks see up to a 12× speedup, and 52% of all Claude.ai consumer interactions are now collaborative augmentation rather than pure automation.

Meanwhile, the Model Context Protocol (MCP), the open standard Anthropic introduced in November 2024, has been adopted by OpenAI, Google DeepMind, and Microsoft, accumulating 97 million monthly SDK downloads and 10,000+ active servers by year-end 2025.

The implication: anyone can now learn any profession and accomplish virtually anything in the digital world. Anthropic’s internal research confirms that 27% of AI-assisted work consists of tasks that simply wouldn’t have been done otherwise, and engineers report becoming genuinely “full-stack,” building polished UIs, data pipelines, and interactive dashboards outside their core expertise.

The COO role is shifting from reactive execution to predictive orchestration. According to the COO Forum 2025 report, 6 in 10 COOs lead AI initiatives, yet only 1 in 5 feel prepared, and 88% focus narrowly on efficiency rather than innovation.

Anthropic’s landmark internal study (132 engineers, 53 interviews, 200K Claude Code transcripts) found:

| Metric | Before | Now | Change |
| --- | --- | --- | --- |
| AI usage in daily work | 28% | 59% | +2.1× |
| Self-reported productivity boost | +20% | +50% | +2.5× |
| Merged PRs per engineer/day | Baseline | +67% | +67% |
| Consecutive AI actions without human input | 9.8 | 21.2 | +116% |

Engineers report that AI “dramatically decreased the energy required to start tackling a problem”.

A mid-sized logistics company processes ~3,000 supplier invoices per month. Before AI, a three-person team spent 5 days per cycle manually extracting data from PDFs, cross-referencing PO numbers, and flagging discrepancies.

After deploying an AI workflow layer (Claude + MCP integrations to their ERP and cloud storage): invoice extraction is automated, discrepancies are flagged in real time, and human review is needed only for edge cases.
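The exception-routing step described above can be sketched in a few lines. This is a minimal illustration, not the company's actual workflow: the `Invoice` fields, the `triage_invoice` function, the 1% tolerance, and the PO lookup dictionary are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: str
    po_number: str
    amount: float


def triage_invoice(invoice, purchase_orders, tolerance=0.01):
    """Route an extracted invoice to auto-approval or human review.

    purchase_orders maps PO number -> expected amount (hypothetical shape).
    tolerance is the allowed relative difference (assumed 1% here).
    """
    expected = purchase_orders.get(invoice.po_number)
    if expected is None:
        # No matching purchase order: an edge case for a person to check.
        return ("human_review", "unknown PO number")
    if abs(invoice.amount - expected) > tolerance * expected:
        # Amount disagrees with the PO beyond the tolerance.
        return ("human_review", "amount differs from PO")
    return ("auto_approve", "matches PO")


purchase_orders = {"PO-1": 1000.0}
print(triage_invoice(Invoice("INV-1", "PO-1", 1000.0), purchase_orders))
print(triage_invoice(Invoice("INV-2", "PO-9", 50.0), purchase_orders))
```

The design point is that the default path is automated and only mismatches reach a person, which is what keeps the review workload small.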

| KPI | Before | After |
| --- | --- | --- |
| Processing time | 5 days | 1 day |
| Error rate | 4.2% | 0.8% |
| FTE required | 3 | 1 (review only) |
| Monthly cost | ~$15K | ~$4K (incl. AI tooling) |
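To make the before/after numbers easier to compare, here is a small sketch that turns each KPI pair into a percentage reduction. The figures come from the table above (the cost values are the approximate ones given there); the `percent_reduction` helper and the dictionary keys are illustrative names.

```python
def percent_reduction(before, after):
    """Relative reduction from before to after, as a percentage."""
    return round((before - after) / before * 100, 1)


# (before, after) pairs taken from the KPI table; costs are approximate.
kpis = {
    "processing_days": (5, 1),
    "error_rate_pct": (4.2, 0.8),
    "fte": (3, 1),
    "monthly_cost_usd": (15_000, 4_000),
}

reductions = {name: percent_reduction(b, a) for name, (b, a) in kpis.items()}
for name, value in reductions.items():
    print(f"{name}: {value}% lower")
```

On these inputs, processing time falls by 80%, the error rate by about 81%, headcount by about 67%, and monthly cost by about 73%.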

This is a Phase 2 implementation: workflow automation with a human in the loop for exceptions.

Claude Cowork transforms Claude from a chat interface into a desktop agent that works independently within folders you designate. Anthropic describes it as “less like a back-and-forth and more like leaving messages for a coworker”.

After launching Claude Code, Anthropic noticed developers were forcing the coding tool to do non-coding work: vacation research, slide decks, email cleanup. VentureBeat reported that Cowork was built in ~10 days, largely using Claude Code itself.

Available now as a research preview for Claude Max subscribers ($100–$200/month) on macOS with Apple Silicon.

The Model Context Protocol is an open standard: a protocol specification built on JSON-RPC 2.0 that standardizes how AI connects to external data, tools, and services. Anthropic also maintains an open-source repository of reference server implementations, but MCP itself is best understood as a protocol spec, analogous to HTTP or LSP, not a software product.
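Because MCP rides on JSON-RPC 2.0, a tool invocation is just a small JSON message. The sketch below builds one such request using the `tools/call` method from the MCP specification; the tool name `lookup_invoice` and its arguments are hypothetical, and a real client would also handle initialization, transport, and responses.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",          # protocol version marker required by JSON-RPC
        "id": request_id,          # lets the client match the response
        "method": "tools/call",    # MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }


# "lookup_invoice" is a made-up tool name used only for illustration.
request = make_tool_call(1, "lookup_invoice", {"invoice_id": "INV-1001"})
wire = json.dumps(request)
print(wire)
```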

Think of it as USB-C for AI: instead of M×N custom integrations, MCP collapses complexity into M+N.
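The M×N versus M+N point is simple arithmetic, shown here with illustrative numbers (5 AI applications and 20 tools are assumptions, not figures from any report).

```python
def point_to_point(m_apps, n_tools):
    """Every app needs its own connector to every tool."""
    return m_apps * n_tools


def via_shared_protocol(m_apps, n_tools):
    """Each app and each tool implements the protocol once."""
    return m_apps + n_tools


m_apps, n_tools = 5, 20  # hypothetical counts
print(point_to_point(m_apps, n_tools))       # 100 custom integrations
print(via_shared_protocol(m_apps, n_tools))  # 25 protocol implementations
```

The gap widens as either side grows, which is why a shared protocol matters more in large tool ecosystems than in small ones.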

| Date | Milestone |
| --- | --- |
| Nov 2024 | Anthropic releases MCP as an open standard |
| Mar 2025 | OpenAI adopts MCP; Sam Altman: “People love MCP” |
| Apr 2025 | Google DeepMind adds MCP support to Gemini |
| Dec 2025 | Anthropic donates MCP to the AAIF under the Linux Foundation, co-founded with OpenAI and Block |

By the end of 2025: 97M monthly SDK downloads, 10K+ servers, and support in Claude, ChatGPT, Cursor, Gemini, Copilot, and VS Code.

Together, Cowork and MCP create an AI that can read local files (Cowork) → pull CRM data (MCP) → query databases (MCP) → generate reports (Cowork) → upload to Drive (MCP) → post summaries to Slack (MCP).

Anthropic’s research shows AI is dissolving professional barriers.

The ITU AI Skills Coalition (40+ partners, 60+ training programs) is working to scale this globally. Gartner reports that 77% of employees participate in AI training when offered, yet only 42% can identify situations where AI can meaningfully help them.

A B2B SaaS company’s marketing lead needed to identify which content drove pipeline. Historically this was a 2-week request to the BI team, and one that was often deprioritized.

Using Claude + MCP connectors to HubSpot and Google Analytics, she ran her own attribution analysis in an afternoon: exported campaign data via MCP, asked Claude to correlate content engagement with deal progression, and produced a slide deck with Cowork.

| KPI | Before | After |
| --- | --- | --- |
| Time to insight | 2–3 weeks (BI queue) | 4 hours |
| Frequency | Quarterly (if lucky) | On-demand |
| Actionability | Static report | Interactive, self-serve |

This is exactly the “activation energy” reduction Anthropic describes: processes that once took “a couple weeks” become “a couple hour working session”.

Measuring AI value remains notoriously hard. The MIT NANDA study “The GenAI Divide”, based on 150 leader interviews, 350 employee surveys, and 300 public AI deployments, found that only about 5% of enterprise GenAI pilots reach production with measurable P&L impact. Separately, Gartner predicted that at least 30% of GenAI projects would be abandoned after POC by end of 2025. Purchased vendor solutions succeed roughly 67% of the time, while internal builds succeed only one-third as often, according to the MIT data.

| Tier | Timeframe | What to Measure |
| --- | --- | --- |
| Action Counts | Week 1 to day 30 | Active users, adoption rate, training completion, integrations live |
| Workflow Efficiency | 30–90 days | Time saved per task, error rate reduction, user satisfaction, engagement depth |
| Revenue Impact | 6–12+ months | Cost reductions, revenue uplift, risk mitigation, market share |
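One way to operationalize the three tiers is to encode when each starts and ask which ones should be reported at a given point after launch. This is a sketch under assumptions: the start days (0, 30, and 180, treating 6 months as roughly 180 days) and the `tiers_in_scope` helper are illustrative, and the metric lists are shortened from the table.

```python
# (tier name, assumed start day after launch, example metrics)
TIERS = [
    ("Action Counts", 0, ["active users", "adoption rate"]),
    ("Workflow Efficiency", 30, ["time saved per task", "error rate reduction"]),
    ("Revenue Impact", 180, ["cost reductions", "revenue uplift"]),
]


def tiers_in_scope(days_since_launch):
    """Return the names of tiers whose measurement window has started."""
    return [name for name, start_day, _ in TIERS if days_since_launch >= start_day]


print(tiers_in_scope(10))   # only adoption metrics are meaningful yet
print(tiers_in_scope(200))  # all three tiers are in scope
```

The point of the ordering is to avoid judging a deployment on revenue impact before adoption and efficiency data exist.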

Research from Sparkco shows enterprises focusing on 3–5 concise KPIs see a 20% increase in decision-making quality. The Anthropic Economic Index adds a critical nuance: when you factor in AI success rates on complex tasks, projected productivity gains may be substantially more modest than headline adoption numbers suggest.

Full governance frameworks (ISO 42001, EU AI Act compliance, NIST AI RMF) can take 6–12 months to implement. Most organizations can’t wait that long. A Minimum Viable Governance (MVG) approach lets you start safely and scale rigor as AI usage matures.

| Element | What It Means | Example |
| --- | --- | --- |
| Owner | Every AI deployment has a named accountable person | Head of Ops owns the invoice-processing agent |
| Reviewer | A human verifies outputs before they reach customers or systems of record | Senior analyst spot-checks 10% of AI-processed invoices weekly |
| Risk triage | Red / Yellow / Green classification for all AI use cases | 🟢 Internal productivity (fast-track) · 🟡 Customer-facing + sensitive data (human-in-loop required) · 🔴 High-stakes decisions (full governance required) |
| Audit cadence | Regular review of AI accuracy, drift, and security | Monthly accuracy review; quarterly security audit; annual full governance review |
| SLA for updates | Defined response time when AI behavior degrades | P1 (customer impact): 4-hour response · P2 (internal): 48-hour response · Model/MCP updates: tested in staging ≥72 hours before production |
| Incident log | Track AI errors, hallucinations, unexpected behavior | Every “AI weirdness” logged → becomes foundation for full governance system |
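The risk triage and SLA rows lend themselves to a small, explicit rule set. The sketch below is one possible encoding, not a standard: the `triage` function and its three boolean inputs are hypothetical, and it assumes that either customer-facing use or sensitive data alone is enough to land in Yellow.

```python
from enum import Enum


class Risk(Enum):
    GREEN = "fast-track"
    YELLOW = "human-in-loop required"
    RED = "full governance required"


# Response-time targets in hours, taken from the SLA row of the table.
SLA_HOURS = {"P1": 4, "P2": 48}


def triage(customer_facing, sensitive_data, high_stakes_decision):
    """Classify an AI use case; the most severe matching rule wins."""
    if high_stakes_decision:
        return Risk.RED
    if customer_facing or sensitive_data:  # assumption: either one triggers Yellow
        return Risk.YELLOW
    return Risk.GREEN


print(triage(False, False, False).value)  # internal productivity tool
print(triage(True, True, False).value)    # customer-facing with sensitive data
print(triage(True, True, True).value)     # high-stakes decision
```

Writing the rules down this plainly also gives the incident log something concrete to reference when a use case is reclassified.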

The principle: “Safe enough to start” is a valid strategy. Build the bridge first, then reinforce the structure as you grow.

Even promising AI deployments fail when organizations underestimate the non-technical challenges. Four patterns dominate:

1. Data Quality: 45% of leaders cite data accuracy as their top deployment barrier; 42% lack sufficient data to customize models. Amazon’s abandoned hiring algorithm, which systematically discriminated against female candidates, remains the most-cited cautionary tale.

2. Access Control: Early Cowork testers saw unexpected file consumption when granting broad directory access. On the MCP side, researchers documented “toxic agent flows” where combining tools creates unintended data exfiltration paths.

3. Prompt Injection: As MCP connects agents to databases and APIs, malicious content in data sources can hijack agent behavior. The spec says there “SHOULD always be a human in the loop”; treat that as MUST.

4. Change Management: The RAND Corporation finds projects collapse most often because executives misunderstand the problem AI should solve. Meanwhile, Anthropic discovered a “paradox of supervision”: AI erodes the very skills needed to supervise it.
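The access-control and human-in-the-loop points above can be combined into a simple gate in front of agent actions. This is a minimal sketch under assumptions, not a feature of Cowork or MCP: the allowed folder, the action names, and the `gate_tool_call` function are all hypothetical, and it requires Python 3.9+ for `Path.is_relative_to`. It limits what a hijacked agent can do; it does not detect prompt injection itself.

```python
from pathlib import Path

# Hypothetical policy: the agent may only touch this folder.
ALLOWED_ROOTS = [Path("/workspace/invoices")]
# Hypothetical action names that change state and so need sign-off.
WRITE_ACTIONS = {"write_file", "delete_file", "send_message"}


def is_path_allowed(candidate, allowed_roots=ALLOWED_ROOTS):
    """True if the path, after resolving '..' and symlinks, stays inside a root."""
    resolved = Path(candidate).resolve()
    return any(resolved.is_relative_to(root.resolve()) for root in allowed_roots)


def gate_tool_call(action, path, approved_by_human=False):
    """Return 'deny', 'needs_approval', or 'allow' for a requested action."""
    if not is_path_allowed(path):
        return "deny"
    if action in WRITE_ACTIONS and not approved_by_human:
        return "needs_approval"
    return "allow"


print(gate_tool_call("read_file", "/workspace/invoices/a.pdf"))
print(gate_tool_call("read_file", "/workspace/invoices/../secrets/key"))
print(gate_tool_call("write_file", "/workspace/invoices/a.pdf"))
```

Resolving the path before checking it is the important detail: a naive prefix check would let `../` sequences escape the designated folder.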

1. Agentic AI hits mainstream enterprise, and half will stall.

Gartner predicts over 40% of agentic AI projects will be canceled by end of 2027 due to escalating costs, unclear value, or inadequate risk controls, while simultaneously forecasting that 15% of day-to-day work decisions will be made autonomously by AI agents. The winners will be organizations that match agent capabilities to well-defined, high-ROI workflows rather than chasing the hype.

2. The integration moat replaces the model moat.

AI models are increasingly commoditized. The durable competitive advantage lies in connecting AI to your specific data, workflows, and institutional knowledge, which is exactly what MCP enables. The donation to the Linux Foundation signals that MCP is becoming permanent infrastructure, not a vendor play.

3. Success rate becomes the metric that matters.

The Anthropic Economic Index reveals that Claude achieves ~50% success on tasks requiring 3.5 hours of work: strong, but far from perfect. When you adjust for error rates, projected productivity gains drop significantly. The organizations that win will be those measuring successful task completion, not just adoption.
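To see why adjusting for success rate shrinks the headline gain, consider a deliberately simple model. It is an illustration of the idea, not the Economic Index's methodology: it assumes every AI attempt costs a fixed amount of human time, and that a failed attempt means the person does the whole task manually. The 3.5-hour task and 50% success rate come from the text; the 0.5-hour attempt cost is an assumption.

```python
def naive_speedup(task_hours, attempt_hours):
    """Speedup if every AI attempt succeeded."""
    return task_hours / attempt_hours


def adjusted_speedup(task_hours, attempt_hours, success_rate):
    """Speedup once failed attempts fall back to doing the task by hand."""
    expected_hours = attempt_hours + (1 - success_rate) * task_hours
    return task_hours / expected_hours


task_hours = 3.5     # from the text
success_rate = 0.5   # from the text (~50%)
attempt_hours = 0.5  # assumed time to prompt the AI and review its output

naive = naive_speedup(task_hours, attempt_hours)
adjusted = adjusted_speedup(task_hours, attempt_hours, success_rate)
print(round(naive, 2), round(adjusted, 2))
```

Under these assumptions the apparent 7× speedup falls to roughly 1.56×, which is the kind of gap between adoption metrics and completed work that the paragraph above warns about.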

As one Anthropic engineer put it: “Nobody knows what’s going to happen. The important thing is to just be really adaptable”.

The tools are here. The protocols are standardized. The only question is: are you leading the transformation, or waiting for it to happen to you?

Netanel Siboni is a technology leader specializing in AI, cloud, and virtualization. As the founder of Voxfor, he has guided hundreds of projects in hosting, SaaS, and e-commerce with proven results. Connect with Netanel Siboni on LinkedIn to learn more or collaborate on a future project.