A fake video claiming that a coup was under way in France recently went viral on social media, leaving many people around the world believing that real political turmoil was about to unfold against French President Emmanuel Macron. The video spread extremely quickly, racking up more than 13 million views, and an African leader contacted Macron directly to ask what was happening. Even Macron himself could not immediately get Meta to remove it.

The deepfake featured a fake news anchor announcing that a colonel had seized power and Macron had been ousted, with helicopters, armed soldiers, and the Eiffel Tower visible in the background. It was created in minutes by a teenager in Burkina Faso using OpenAI’s Sora 2 video generator. The incident coincides with Google’s announcement of a significant new capability: detecting videos created with Google AI.

The timing is not coincidental. Deepfake videos increased 550 percent between 2019 and 2024, and false videos now spread rapidly across social media, shaping public perception of real-world events. Users can now upload a video to Gemini and ask, “Was this generated using Google AI?” The app scans both the audio and the visuals and reports which segments contain AI-generated elements.

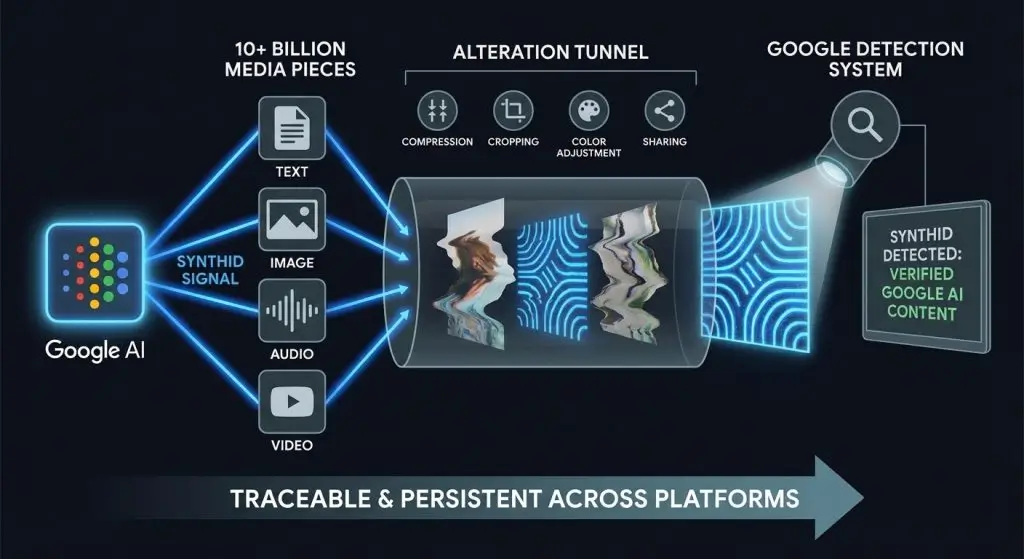

The verification relies on SynthID, Google’s proprietary digital watermarking technology, which embeds imperceptible signals into content generated by Google’s AI tools. More than 10 billion pieces of media have already been watermarked this way, making it one of the largest watermarking deployments in the world.

SynthID was first introduced in 2023 and has since been extended to watermark text, images, audio, and video. The watermark is designed to be imperceptible to humans but easily detectable by Google’s systems. Critically, it survives basic digital alterations: simple modifications do not remove it, and it remains traceable even after the content is shared online or through messaging platforms.

This is a major advantage over older watermarking methods, which can be removed by simple compression, cropping, or contrast adjustments. Google says SynthID is resistant to such common manipulations, but it is unclear how well it holds up against sophisticated adversarial attacks designed specifically to strip watermarks.
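SynthID’s exact embedding method for images and video is not described here, so the sketch below is a generic toy, not SynthID: a classic spread-spectrum watermark that adds a faint, key-derived ±1 pattern to a signal and detects it by correlation. It illustrates the two properties described above, namely that the mark is tiny relative to the content yet someone holding the key can still find it after mild brightness changes and noise. The function names and the `strength`, `threshold`, and sample-count values are illustrative assumptions.

```python
import random

def keyed_pattern(key: int, n: int) -> list[int]:
    # Pseudo-random +1/-1 sequence; only someone holding `key` can regenerate it.
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(samples: list[float], key: int, strength: float = 2.0) -> list[float]:
    # Toy scheme (not SynthID): add the keyed pattern at low amplitude,
    # about 1% of a 0-255 pixel range.
    pattern = keyed_pattern(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pattern)]

def detection_score(samples: list[float], key: int) -> float:
    # Correlate the mean-removed samples with the keyed pattern.
    # Unmarked content scores near 0; marked content scores near `strength`.
    pattern = keyed_pattern(key, len(samples))
    mean = sum(samples) / len(samples)
    return sum((s - mean) * p for s, p in zip(samples, pattern)) / len(samples)

def is_watermarked(samples: list[float], key: int, threshold: float = 1.0) -> bool:
    # Threshold sits halfway between the unmarked (0) and marked (2.0) scores.
    return detection_score(samples, key) > threshold

if __name__ == "__main__":
    rng = random.Random(0)
    host = [rng.uniform(0, 255) for _ in range(200_000)]  # stand-in for pixel values
    marked = embed(host, key=42)
    # A "basic alteration": dim by 10% and add mild Gaussian noise.
    altered = [0.9 * s + rng.gauss(0, 5) for s in marked]
    print("unmarked:", is_watermarked(host, key=42))
    print("marked:", is_watermarked(marked, key=42))
    print("marked, then altered:", is_watermarked(altered, key=42))
    print("wrong key:", is_watermarked(marked, key=7))
```

Unlike what Google claims for SynthID, this toy does not survive cropping: shifting the samples misaligns the pattern and the correlation collapses, which is one reason production watermarking schemes are considerably more sophisticated.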

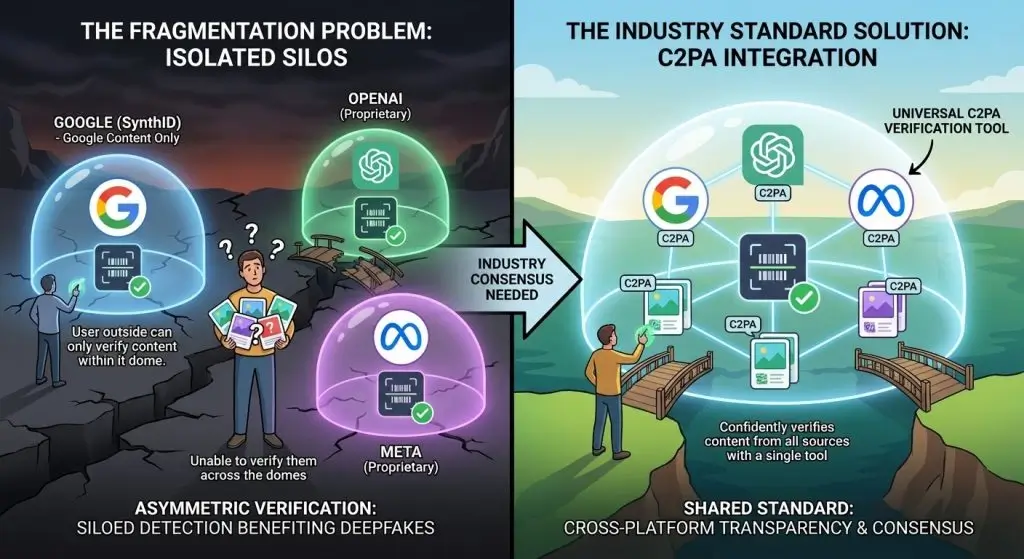

The biggest drawback is inherent to the design: the tool identifies only content made with Google AI. It cannot detect videos created by OpenAI’s Sora, Meta’s generative AI video model, Microsoft’s Copilot Studio, or any other third-party video generator. This limitation severely restricts the tool’s usefulness for identifying much of the AI-generated content shared online.

The technical explanation is straightforward: SynthID watermarks are embedded only in content produced or altered by Google’s AI tools, meaning Gemini cannot identify whether uploaded content was generated using other AI platforms. This creates a “siloed” detection ecosystem where Google can only verify its own output.
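The siloed setup can be modeled in a few lines. In this hypothetical sketch, each provider’s detector recognizes only its own watermark, so a Sora-generated clip and genuine camera footage receive the same answer from a Google-only checker. The `Clip`, `WatermarkDetector`, and `verify` names, and the idea of a clip carrying a provider label, are illustrative assumptions, not any real API.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

@dataclass(frozen=True)
class Clip:
    title: str
    # Hypothetical field: whose watermark (if any) the generator embedded.
    embedded_watermark: Optional[str]

@dataclass(frozen=True)
class WatermarkDetector:
    provider: str

    def check(self, clip: Clip) -> Optional[str]:
        # A detector can only recognize its own provider's watermark.
        return self.provider if clip.embedded_watermark == self.provider else None

def verify(clip: Clip, detectors: Iterable[WatermarkDetector]) -> str:
    for detector in detectors:
        found = detector.check(clip)
        if found is not None:
            return f"AI-generated: {found} watermark found"
    # No known watermark: the clip may be authentic OR made by a tool we cannot check.
    return "inconclusive: no known watermark found"

google_only: List[WatermarkDetector] = [WatermarkDetector("Google")]

if __name__ == "__main__":
    clips = [
        Clip("Google AI clip", "Google"),
        Clip("Sora 2 clip", "OpenAI"),  # like the French coup deepfake
        Clip("Phone camera footage", None),
    ]
    for clip in clips:
        print(f"{clip.title}: {verify(clip, google_only)}")
```

Running it prints `inconclusive` for both the Sora 2 clip and the camera footage. A negative result from a single-vendor detector therefore means “not made with this vendor’s watermarked tools,” not “authentic.” Adding a `WatermarkDetector("OpenAI")` to the list would flag the Sora clip, which is the kind of cross-vendor coverage the industry still lacks.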

Importantly, the French coup deepfake was made with OpenAI’s Sora 2, not a Google tool, so Gemini would have had no way of recognizing it.

The feature supports files of up to 100 MB and up to 90 seconds long. Both image and video verification are now available globally, in all languages supported by the Gemini app.

Using it is simple: a user opens the Gemini app, uploads the video they want to check, and asks whether it was created or edited with AI. Gemini analyzes the visual and audio tracks separately and reports whether SynthID watermarks appear in either or both.
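Gemini’s internal output format is not documented, so the following is a hypothetical sketch of how a per-track report could be assembled: a pre-upload check against the stated 100 MB and 90-second limits, followed by merging per-window detection flags for the visual and audio tracks into time ranges. The window size, the boolean-flag input, the function names, and reading “100 MB” as 100 MiB are all assumptions.

```python
from typing import Dict, List, Optional, Tuple

MAX_BYTES = 100 * 1024 * 1024  # assumption: "100 MB" read as 100 MiB
MAX_SECONDS = 90               # duration limit stated in the article

def within_limits(size_bytes: int, duration_s: float) -> bool:
    # Pre-upload check against the file-size and duration limits.
    return size_bytes <= MAX_BYTES and duration_s <= MAX_SECONDS

def merge_flagged(flags: List[bool], window_s: float) -> List[Tuple[float, float]]:
    # Turn per-window detection flags into (start, end) time ranges in seconds.
    ranges: List[Tuple[float, float]] = []
    start: Optional[float] = None
    for i, flagged in enumerate(flags):
        if flagged and start is None:
            start = i * window_s
        elif not flagged and start is not None:
            ranges.append((start, i * window_s))
            start = None
    if start is not None:  # a flagged run extends to the end of the clip
        ranges.append((start, len(flags) * window_s))
    return ranges

def summarize(track_flags: Dict[str, List[bool]], window_s: float) -> Dict[str, List[Tuple[float, float]]]:
    # Each track (e.g. "visual", "audio") is reported independently.
    return {track: merge_flagged(flags, window_s) for track, flags in track_flags.items()}
```

For example, `summarize({"visual": [False, True, True, False], "audio": [True, True, True, True]}, window_s=5.0)` returns `{'visual': [(5.0, 15.0)], 'audio': [(0.0, 20.0)]}`: a clip whose soundtrack is flagged throughout but whose picture is flagged only between 5 and 15 seconds.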

Meta initially refused to remove the French coup video, stating that it did not violate its “rules of use.” Macron criticized Meta directly, saying the company showed “no concern for fostering healthy public discourse” and was “mocking democratic sovereignty.”

The incident revealed the power asymmetry between nation-states and tech platforms. Macron stated that while he believed he had more influence than ordinary citizens, “it appears to be ineffective,” highlighting how even sitting presidents struggle to secure the removal of false content from major platforms.

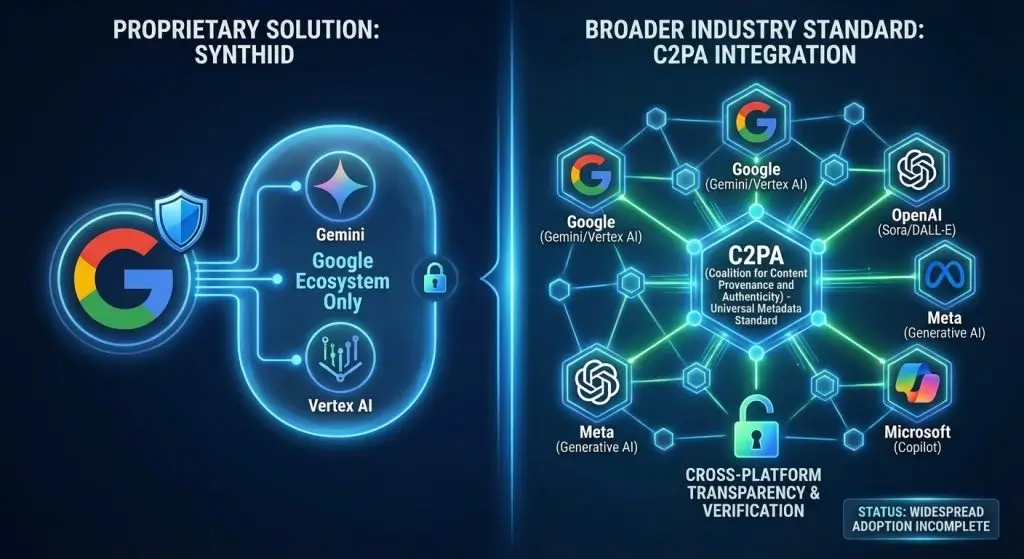

While SynthID is Google’s proprietary solution, the company is also backing a broader industry standard: C2PA, developed by the Coalition for Content Provenance and Authenticity, which aims to tag AI-generated content across all platforms. Google announced that images from Gemini and Vertex AI will now carry C2PA metadata, offering transparency beyond Google’s ecosystem.

The C2PA approach is an attempt to establish a shared standard under which competitors such as OpenAI, Meta, and Microsoft can embed compatible metadata that any verification tool can read. However, adoption of C2PA remains incomplete, and many AI tools have yet to implement the standard.
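Unlike a SynthID watermark, C2PA metadata lives in the file’s structure. In JPEG files, the C2PA specification stores the manifest in APP11 marker segments as JUMBF boxes labeled `c2pa`. The sketch below is a presence heuristic built on that layout: it walks the JPEG marker segments up to the start of image data and reports whether any APP11 segment mentions `c2pa`. It does not parse or cryptographically validate the manifest; the C2PA project’s open-source tools (for example `c2patool`) do that. The function name is illustrative.

```python
import struct

APP11 = 0xEB  # JPEG application segment used for JUMBF / C2PA data
SOS = 0xDA    # start of scan: entropy-coded image data follows
EOI = 0xD9    # end of image

def has_c2pa_segment(jpeg: bytes) -> bool:
    """Heuristic: does this JPEG carry an APP11 segment mentioning 'c2pa'?

    Detects presence only; it does not parse the manifest or check signatures.
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            break  # lost sync with the marker stream; give up
        marker = jpeg[pos + 1]
        if marker == 0xFF:  # fill byte before a marker
            pos += 1
            continue
        if marker in (SOS, EOI):
            break  # metadata segments come before the image data
        # Segment length is big-endian and includes its own 2 bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        payload = jpeg[pos + 4:pos + 2 + length]
        if marker == APP11 and b"c2pa" in payload:
            return True
        pos += 2 + length
    return False

if __name__ == "__main__":
    import sys
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, has_c2pa_segment(f.read()))
```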

The fundamental challenge facing AI content detection is fragmentation. SynthID detection works only for Google content, while similar proprietary systems from OpenAI, Meta, and others operate in isolation. This fragmented landscape creates an asymmetric verification problem: users can verify content produced by Google tools but not by its competitors, which works in favor of anyone creating deepfakes on non-Google systems.

Without broader industry adoption of C2PA, the detection landscape will remain siloed and inadequate. The solution requires competitors to commit to embedding compatible metadata in their AI-generated content, and the industry has not yet reached consensus on that commitment.

Gemini’s video verification is a significant step toward content transparency, but it is a tool, not an end-to-end solution. For individual users, it offers a way to check whether a video was made with Google’s AI systems. For society, it underscores the shortcomings of proprietary, siloed detection mechanisms in the face of a deepfake crisis sweeping the entire internet.

The French coup deepfake demonstrates the practical limits of Google’s approach: the video was created with OpenAI’s Sora 2, so Gemini’s verification could not have detected it. The real test of the tool will be whether it helps drive wider adoption of industry standards such as C2PA and pushes all AI companies to add verifiable metadata to their outputs. Without such an industry-wide commitment, verification tools will remain niche products with a limited user base, and most social media users will keep sharing unverified content.

Netanel Siboni is a technology leader specializing in AI, cloud, and virtualization. As the founder of Voxfor, he has guided hundreds of projects in hosting, SaaS, and e-commerce with proven results. Connect with Netanel Siboni on LinkedIn to learn more or collaborate on future projects.