
Large Language Models (LLMs) such as GPT-4 and early versions of Claude acted as oracles: passive repositories of intelligence that received a user's query, processed it, and returned text. This model of interaction was revolutionary, but it was fundamentally limited by its isolation from the user's real working environment. The model could draft an email, but it could not send it. It could write Python code to index files, but it could not run that code on the user's desktop. The last mile of productivity, the actual performance of the work, remained a manual human process.

In January 2026, this paradigm shifted decisively with the release of Claude Co-work.

This report provides an exhaustive analysis of the Claude Co-work ecosystem, a desktop-native agentic framework released by Anthropic. Drawing on technical documentation, early adopter transcripts, and verified feature breakdowns from the weeks following its January 12, 2026, launch, we examine the system’s architecture, its nine core functional pillars, and its broader implications for the global economy of knowledge work. Unlike its predecessors, Claude Co-work utilizes a local-first, virtualization-based architecture that allows it to “take over” a user’s computer, performing complex, multi-step workflows asynchronously.

The significance of this release cannot be overstated. By moving AI from the browser tab to the operating-system level, Anthropic has effectively commoditized the role of the digital executive assistant. The system's ability to orchestrate parallel tasks, manipulate local files inside a virtual machine built on Apple's Virtualization framework, and interface with arbitrary web applications fundamentally reconfigures the unit economics of administrative labor.2 With a pricing strategy aggressively targeting the mass market, dropping from an exclusive $100/month “Max” tier to a $20/month “Pro” tier within days of launch, Claude Co-work represents the first mass-market deployable “AI Employee”.

This analysis breaks down the nine “insane” features identified by early power users, dissecting the technical mechanisms behind them (such as the Model Context Protocol and FFmpeg integration) and evaluating their impact on enterprise productivity.

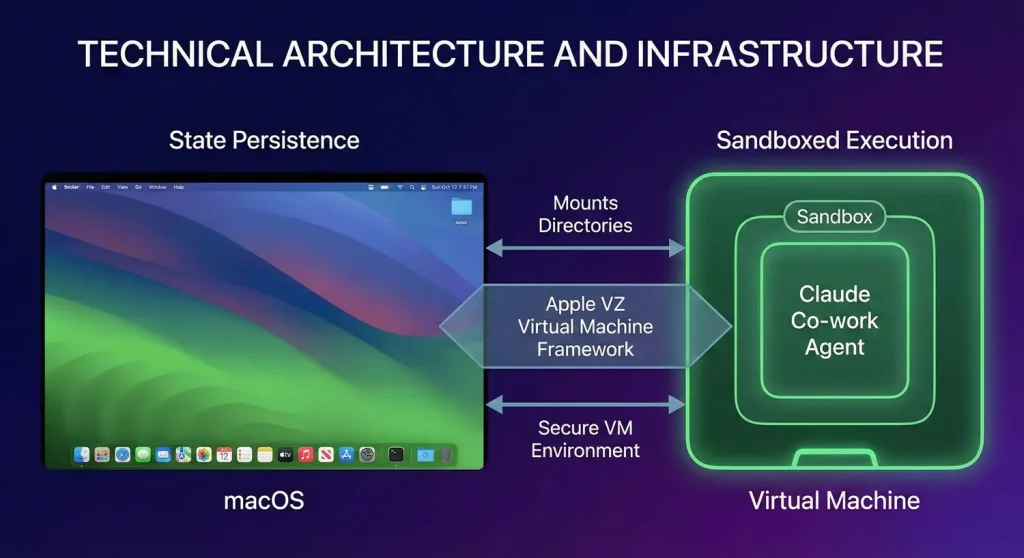

To understand the capabilities of Claude Co-work, one must first understand the radical architectural departure it represents from standard cloud-based LLMs. The transition from a chatbot to a “co-worker” required solving two critical problems: State Persistence and Sandboxed Execution.

The most distinctive technical characteristic of the initial Claude Co-work release is its reliance on Apple's Virtualization framework (the VZ-prefixed APIs such as VZVirtualMachine).1 Unlike a standard desktop application that runs with the user's permissions (and thus poses a risk of accidental mass deletion or privacy breaches), Claude Co-work instantiates a lightweight, hardware-accelerated virtual machine (VM) on the host macOS device.

This architecture provides a “Sandbox” environment. When a user grants Claude access to a folder, say, the “Desktop” or “Downloads”, the system does not merely give the LLM read/write permissions to the raw file system. Instead, it mounts these directories into the secure VM environment.
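Co-work enforces this boundary with a VM mount, which plain application code cannot reproduce. The same idea (only the granted folders are reachable) can still be illustrated at the application level. The sketch below is not Co-work's code; the granted folder paths are hypothetical, and it assumes Python 3.9+ for `Path.is_relative_to`.

```python
from pathlib import Path
from typing import Iterable

# Hypothetical folders the user has granted; not Co-work's actual configuration.
GRANTED_ROOTS = [Path("/Users/alex/Desktop"), Path("/Users/alex/Downloads")]


def is_within_grant(requested: str, roots: Iterable[Path] = GRANTED_ROOTS) -> bool:
    """Return True only if `requested` resolves to a location inside a granted root.

    resolve() makes the path absolute, collapses ".." segments and follows
    symlinks that exist on disk, so traversal tricks such as
    "Desktop/../Library/Keychains" are rejected. is_relative_to() compares whole
    path components, so "/Users/alex/DesktopBackup" does not match "Desktop".
    """
    target = Path(requested).resolve()
    return any(target.is_relative_to(root.resolve()) for root in roots)


print(is_within_grant("/Users/alex/Desktop/report.pdf"))
print(is_within_grant("/Users/alex/Desktop/../Library/Keychains/login"))
```

Comparing path components rather than string prefixes is the important design choice here: a naive `startswith` check would wrongly accept sibling folders whose names merely begin with a granted folder's name.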

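Claude Co-work operates on a hybrid topology that balances privacy with intelligence: files are accessed and code is executed locally inside the VM, while the model's reasoning runs on Anthropic's cloud infrastructure.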

A critical innovation in Co-work is the concept of the “Long-Running Task” or persistent state. In a chat interface, the “state” is the conversation history. In Co-work, the state is the file system and the active browser session. The agent maintains a “mental model” of the project directory. If a user adds a file to a watched folder, Claude perceives this change. This persistence enables Scheduled Automation (Feature 9), allowing the system to wake up, execute a task, and return to sleep without human initiation, effectively behaving like a system daemon rather than a user application.
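How Co-work detects changes in a watched folder is not documented in the source. A minimal way to "perceive" a new file is to snapshot a folder and diff successive snapshots; the polling approach below is an assumption chosen for simplicity, and a production macOS agent would more likely subscribe to a native file-system event API such as FSEvents.

```python
import os
import time
from typing import Callable, Dict, Optional, Set, Tuple

Snapshot = Dict[str, float]  # file name -> last-modified timestamp


def take_snapshot(folder: str) -> Snapshot:
    """Record every regular file directly inside `folder` with its mtime."""
    with os.scandir(folder) as entries:
        return {e.name: e.stat().st_mtime for e in entries if e.is_file()}


def diff_snapshots(old: Snapshot, new: Snapshot) -> Tuple[Set[str], Set[str], Set[str]]:
    """Return (added, removed, modified) file names between two snapshots."""
    added = new.keys() - old.keys()
    removed = old.keys() - new.keys()
    modified = {n for n in old.keys() & new.keys() if old[n] != new[n]}
    return set(added), set(removed), modified


def watch(folder: str,
          on_change: Callable[[Set[str], Set[str], Set[str]], None],
          interval: float = 2.0,
          max_polls: Optional[int] = None) -> None:
    """Poll `folder` every `interval` seconds and call `on_change` when it differs."""
    previous = take_snapshot(folder)
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval)
        current = take_snapshot(folder)
        added, removed, modified = diff_snapshots(previous, current)
        if added or removed or modified:
            on_change(added, removed, modified)
        previous = current
        polls += 1
```

The `on_change` callback is where an agent would hand the new or changed files to the model as fresh context.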

The transcript provided outlines nine specific features that define the Claude Co-work experience. These features are not merely incremental updates but represent distinct categories of automated labor. Below, we analyze each feature’s mechanism, utility, and implications.

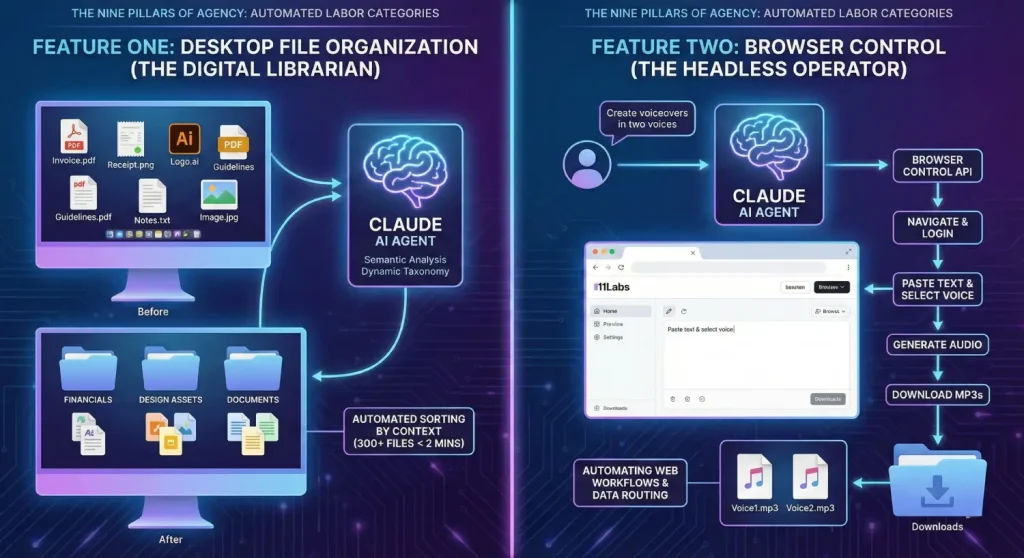

The Problem:

Digital hoarding is a pervasive issue in the modern enterprise. Users accumulate thousands of files in unstructured directories (Desktop, Downloads), leading to significant cognitive load and search latency. Manual organization is high-friction and low-value work.

The Solution:

Feature One allows the user to simply command, “Organize my desktop”.
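The source does not say how Co-work decides where each file belongs; presumably the model judges categories semantically from names and contents. As a simplified stand-in, the sketch below groups files by extension using a hypothetical category map, builds a dry-run plan first, and refuses to overwrite anything when the plan is applied.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical category map; a semantic agent would not rely on extensions alone.
CATEGORIES = {
    "Images": {".png", ".jpg", ".jpeg", ".gif", ".heic"},
    "Documents": {".pdf", ".docx", ".txt", ".md"},
    "Spreadsheets": {".xlsx", ".csv", ".numbers"},
    "Archives": {".zip", ".tar", ".gz"},
}


def categorize(filename: str) -> str:
    """Pick a folder name from the file extension (case-insensitive)."""
    suffix = Path(filename).suffix.lower()
    for category, suffixes in CATEGORIES.items():
        if suffix in suffixes:
            return category
    return "Other"


def plan_moves(filenames: List[str]) -> Dict[str, List[str]]:
    """Group file names by target folder without touching the disk (a dry run)."""
    plan: Dict[str, List[str]] = {}
    for name in sorted(filenames):
        plan.setdefault(categorize(name), []).append(name)
    return plan


def apply_plan(root: Path, plan: Dict[str, List[str]]) -> None:
    """Move each file into root/<category>/, skipping any name that already exists."""
    for category, names in plan.items():
        target_dir = root / category
        target_dir.mkdir(exist_ok=True)
        for name in names:
            destination = target_dir / name
            if not destination.exists():
                (root / name).rename(destination)


print(plan_moves(["b.PNG", "a.pdf", "data.csv", "notes"]))
```

Separating the plan from its execution lets the user review the proposed moves before anything on disk changes.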

Implication:

This feature demonstrates the power of Semantic File Systems. It decouples the organization from the user's memory, effectively treating the file system as a database that the AI manages. It is the first step in “Abstracting the Filesystem,” where users may eventually stop managing folders altogether, relying on the agent to retrieve assets via natural language.

The Problem:

The web is fragmented. Disparate tools (e.g., ElevenLabs for audio, Canva for design, ChatGPT for text) require users to act as “human routers,” copy-pasting data between tabs. APIs exist but are often inaccessible to non-technical users.

The Solution:

Claude Co-work introduces “Browser Control,” enabling the agent to drive a web browser directly. The transcript illustrates this with ElevenLabs, a voice synthesis platform.

Technical Insight: This capability relies on Computer Use vision models (likely Claude 3.5 Sonnet’s computer use beta capabilities).6 The model “sees” the webpage screenshots, identifies UI elements (buttons, text fields) by their visual coordinates or DOM tags, and simulates mouse clicks and keystrokes. This allows automation of any website, regardless of whether it has a public API, bypassing the “walled garden” problem of modern SaaS.
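The screenshot-then-act cycle described above can be sketched as a bounded observe-decide-act loop. In this illustration, `observe`, `decide`, and `execute` are hypothetical stand-ins for taking a screenshot, querying the vision model, and synthesizing mouse and keyboard input; the toy "page" is simulated and does not represent any real site.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    kind: str        # "click", "type", or "done"
    target: str = ""  # label of the UI element the model picked
    text: str = ""    # text to enter, for "type" actions


def run_agent(observe: Callable[[], str],
              decide: Callable[[str], Action],
              execute: Callable[[Action], None],
              max_steps: int = 20) -> List[Action]:
    """Repeat observe -> decide -> act until the model reports "done".

    The step cap stops the loop if the model never declares the task finished.
    """
    history: List[Action] = []
    for _ in range(max_steps):
        action = decide(observe())
        history.append(action)
        if action.kind == "done":
            return history
        execute(action)
    raise RuntimeError(f"task not finished after {max_steps} steps")


# Toy simulation of a text-to-speech page (entirely hypothetical).
page = {"text_box": "", "audio_ready": False}


def observe() -> str:
    return f"text_box={page['text_box']!r} audio_ready={page['audio_ready']}"


def decide(screen: str) -> Action:
    # A rule-based stand-in for the model's reading of the screenshot.
    if "text_box=''" in screen:
        return Action("type", target="text_box", text="Welcome to the show.")
    if "audio_ready=False" in screen:
        return Action("click", target="Generate")
    return Action("done")


def execute(action: Action) -> None:
    if action.kind == "type":
        page["text_box"] = action.text
    elif action.kind == "click" and action.target == "Generate":
        page["audio_ready"] = True


steps = run_agent(observe, decide, execute)
print([a.kind for a in steps])  # ['type', 'click', 'done']
```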

The Problem:

Human cognition is serial. A knowledge worker can generally focus on only one complex task at a time. Previous AI tools mirrored this, forcing users to wait for one prompt to finish before sending another.

The Solution:

Co-work introduces asynchronous parallelism. A user can queue several disparate tasks, such as “Organize files,” “Create a presentation,” and “Update the expense spreadsheet,” and the system executes them simultaneously.

Implication:

This feature shifts the user's role from “Creator” to “Manager.” The user becomes an orchestrator of a digital workforce. The efficiency gains scale with the number of tasks: a user can trigger five 10-minute tasks and walk away, obtaining 50 minutes of labor for the moment it takes to issue the commands, while the wall-clock wait is bounded by the longest task (about 10 minutes) rather than by the sum. This effectively breaks the 1-to-1 relationship between human time and output.
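The source does not describe Co-work's internal scheduler, but the arithmetic of the five-task example can be demonstrated with generic Python concurrency. In this sketch, `simulated_task` is a hypothetical placeholder that sleeps instead of working, and each "10-minute" task is scaled down to 0.1 seconds. `asyncio.gather` starts all of them at once, so elapsed time tracks the longest task rather than the total.

```python
import asyncio
import time


async def simulated_task(name: str, seconds: float) -> str:
    """Stand-in for a long-running agent task; it waits instead of doing work."""
    await asyncio.sleep(seconds)
    return f"{name} finished"


async def run_all(jobs):
    # gather() schedules every coroutine concurrently and waits for all of them.
    return await asyncio.gather(*(simulated_task(name, s) for name, s in jobs))


# Five "10-minute" tasks, scaled down to 0.1 s each for the demo.
jobs = [(f"task-{i}", 0.1) for i in range(5)]
start = time.perf_counter()
results = asyncio.run(run_all(jobs))
elapsed = time.perf_counter() - start

serial_time = sum(seconds for _, seconds in jobs)
print(results)
print(f"wall clock: {elapsed:.2f}s, serial would take {serial_time:.1f}s")
```

This speedup holds for tasks that mostly wait (on the model, the network, or a browser). CPU-heavy local work would need separate processes to run truly in parallel.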

The Problem:

Creating slide decks is often an exercise in formatting rather than ideation. Ensuring brand consistency (fonts, colors, logos) requires meticulous manual adjustment.

The Solution:

Claude Co-work automates the end-to-end creation of presentations using local assets.

Implication:

This commoditizes the “junior consultant” role. The ability to generate a 6-slide, on-brand deck from a one-sentence prompt drastically reduces the barrier to producing professional corporate collateral.

The Problem:

Business Intelligence (BI) often requires a complex “Extract, Transform, Load” (ETL) process: exporting CSVs from a platform like Shopify, cleaning them in Excel, and building charts.

The Solution:

Claude Co-work acts as an automated analyst. The example given is analyzing “monthly sales trends on Shopify”.

Technical Insight: This feature likely leverages Python execution within the sandbox (similar to the “Analysis” tools in other models) to perform statistical regression and chart plotting (using libraries like Matplotlib or Pandas), then compiles the results into a readable format (PDF or HTML dashboard). It democratizes data science, allowing non-technical shop owners to ask complex questions of their data (“Where are we declining?”) without needing SQL skills.

The Problem:

Video editing is technically demanding and time-consuming. Repurposing long-form content (podcasts) into short-form clips (TikToks) involves watching hours of footage to find “moments.”

The Solution:

Feature Six is perhaps the most advanced: programmatic video editing.

Implication:

This is a massive leap for the “Creator Economy.” It automates the “content mill” workflow. While it may not replace the artistic nuance of a human editor for a documentary, it is perfectly sufficient for the high-volume, low-latency requirements of social media content.
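Finding “moments” is presumably done by the model reading a timestamped transcript. The sketch below substitutes simple keyword matching for that judgment; the `Segment` type, keywords, padding, and 60-second cap are all hypothetical choices. Its output is a list of clip windows that a trimming tool could then cut.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Segment:
    start: float  # seconds from the beginning of the recording
    end: float
    text: str


def find_moments(segments: Sequence[Segment], keywords: Sequence[str],
                 pad: float = 2.0, max_len: float = 60.0) -> List[Tuple[float, float]]:
    """Return (start, end) clip windows around segments that mention any keyword.

    Each hit is padded by `pad` seconds, overlapping windows are merged, and
    every clip is capped at `max_len` seconds to suit short-form platforms.
    """
    wanted = [k.lower() for k in keywords]
    windows = sorted(
        (max(0.0, s.start - pad), s.end + pad)
        for s in segments
        if any(k in s.text.lower() for k in wanted)
    )
    merged: List[Tuple[float, float]] = []
    for start, end in windows:
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return [(s, min(e, s + max_len)) for s, e in merged]


transcript = [
    Segment(0, 10, "Welcome back to the podcast"),
    Segment(10, 20, "The biggest mistake founders make"),
    Segment(21, 30, "Another mistake is hiring too fast"),
    Segment(100, 110, "Thanks for listening"),
]
print(find_moments(transcript, ["mistake"]))  # [(8.0, 32.0)]
```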

The Problem:

Email and Calendar management are distinct from file management, usually requiring separate tabs and context switching.

The Solution:

Co-work integrates directly with Google Workspace (Calendar, Gmail, Drive).

Implication:

This feature centralizes the “Control Center” of the user’s professional life. By treating emails and calendar events as just another data type (alongside files and browser tabs), Claude becomes a unified interface for all administrative interaction.

The Innovation: Feature Eight, support for custom MCP servers, is technically the most significant for the platform's longevity. The Model Context Protocol (MCP) is an open standard introduced by Anthropic.

The Mechanism:

MCP acts like a “USB-C port for AI.” It standardizes how the AI connects to external data and tools.

Strategic Value: Feature Eight transforms Claude from a tool into a Platform. It allows enterprises to build custom MCP servers for their proprietary internal tools (e.g., a legacy inventory database), allowing Claude to interact with them securely. This ecosystem play is Anthropic’s strategy to build a “moat” against competitors.

The Problem:

Most AI interactions are reactive. The user must be present to initiate the prompt.

The Solution:

Scheduled Automation turns Claude into a proactive agent.
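The source does not explain how Co-work stores or triggers schedules. The core calculation any scheduler needs is “when is the next run?”. A minimal sketch using naive local datetimes is shown below; the helper names are hypothetical, and time zones and daylight-saving transitions are deliberately ignored. On macOS, a system-level job would more typically be handed to launchd.

```python
from datetime import datetime, time, timedelta


def next_daily_run(now: datetime, at: time) -> datetime:
    """Next occurrence of a daily job scheduled at wall-clock time `at`."""
    candidate = datetime.combine(now.date(), at)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


def next_weekly_run(now: datetime, weekday: int, at: time) -> datetime:
    """Next occurrence of a weekly job; weekday uses Monday=0 ... Sunday=6."""
    days_ahead = (weekday - now.weekday()) % 7
    candidate = datetime.combine(now.date() + timedelta(days=days_ahead), at)
    if candidate <= now:
        candidate += timedelta(days=7)
    return candidate


now = datetime(2026, 1, 12, 9, 30)          # a Monday
print(next_daily_run(now, time(8, 0)))      # 2026-01-13 08:00:00
print(next_weekly_run(now, 4, time(9, 0)))  # 2026-01-16 09:00:00
```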

Implication:

This creates the “24/7 Employee” dynamic. The agent works while the user sleeps. It allows for consistent content cadence and reporting without constant human vigilance, effectively automating the “maintenance” aspects of a job.

To fully appreciate the “Custom MCP Server” feature, we must analyze the Model Context Protocol in greater detail. Released in late 2024 and matured by 2026, MCP is the backbone of the agentic economy.

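MCP functions on a Client-Host-Server topology: the host is the AI application the user works in (here, Claude Co-work), each client inside the host maintains a one-to-one connection with a single server, and each server exposes the tools and data of one external system.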

This architecture abstracts the complexity of APIs. The LLM does not need to know the specific REST API endpoints of Asana; it only needs to know the “Tools” exposed by the Asana MCP Server (e.g., create_task, list_projects). The Server handles the authentication and API calls.
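On the wire, MCP messages are JSON-RPC 2.0, and tools are discovered with `tools/list` and invoked with `tools/call`. The sketch below handles only those two methods, in-process, for the `create_task` and `list_projects` tools named above. The in-memory project store is hypothetical, and the `initialize` handshake and the stdio/HTTP transport that a real server needs are omitted. The official MCP SDKs provide those pieces.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical in-memory backend standing in for a project-management API.
PROJECTS: Dict[str, Dict[str, Any]] = {"p1": {"name": "Website relaunch", "tasks": []}}


def list_projects() -> list:
    return [{"id": pid, "name": p["name"]} for pid, p in PROJECTS.items()]


def create_task(project_id: str, title: str) -> dict:
    tasks = PROJECTS[project_id]["tasks"]
    task = {"id": f"t{len(tasks) + 1}", "title": title}
    tasks.append(task)
    return task


# Tool registry: name -> (handler, description, JSON Schema of the arguments).
TOOLS: Dict[str, Tuple[Callable[..., Any], str, dict]] = {
    "list_projects": (list_projects, "List all projects.",
                      {"type": "object", "properties": {}}),
    "create_task": (create_task, "Create a task in a project.",
                    {"type": "object",
                     "properties": {"project_id": {"type": "string"},
                                    "title": {"type": "string"}},
                     "required": ["project_id", "title"]}),
}


def _reply(req_id: Any, **body: Any) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **body})


def handle(message: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP methods tools/list and tools/call."""
    req = json.loads(message)
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": d, "inputSchema": s}
                 for n, (_, d, s) in TOOLS.items()]
        return _reply(req["id"], result={"tools": tools})
    if method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            return _reply(req["id"], error={"code": -32602, "message": f"Unknown tool: {name}"})
        output = TOOLS[name][0](**params.get("arguments", {}))
        # Results are returned as a list of content items; plain text here.
        return _reply(req["id"], result={"content": [{"type": "text", "text": json.dumps(output)}],
                                         "isError": False})
    return _reply(req.get("id"), error={"code": -32601, "message": "Method not found"})


request = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
           "params": {"name": "create_task",
                      "arguments": {"project_id": "p1", "title": "Draft launch email"}}}
print(handle(json.dumps(request)))
```

The model only ever sees tool names, descriptions, and schemas. The handler functions are where a real server would hold credentials and call the vendor's REST API.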

Just as USB-C allows a hard drive, monitor, or charger to plug into the same port, MCP allows a database, a code repository, or a Slack channel to “plug into” the AI context. This standardization is crucial for the Video Editing feature (Feature 6). Claude does not natively know how to edit video bytes. However, by connecting to an FFmpeg MCP Server, it gains a “Tool” called trim_video(start_time, end_time). The LLM simply calls this tool with the parameters derived from its transcript analysis, and the MCP Server executes the complex FFmpeg command line operation.
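The `trim_video(start_time, end_time)` tool above is an illustrative name, not a published API. Behind such a tool, a server would translate the parameters into an FFmpeg invocation. The sketch below uses standard FFmpeg options (`-ss` to seek, `-t` for duration, `-c copy` for stream copy) and leaves frame-accurate re-encoding as an opt-in. It assumes `ffmpeg` is on the PATH when `trim_video` is actually called.

```python
import shlex
import subprocess
from typing import List


def build_trim_command(src: str, dst: str, start: float, end: float,
                       reencode: bool = False) -> List[str]:
    """Build an ffmpeg argv that cuts the span [start, end) seconds out of `src`."""
    if not 0 <= start < end:
        raise ValueError("need 0 <= start < end")
    cmd = ["ffmpeg", "-y", "-ss", f"{start:.3f}", "-i", src, "-t", f"{end - start:.3f}"]
    if reencode:
        cmd += ["-c:v", "libx264", "-c:a", "aac"]  # frame-accurate, but slower
    else:
        cmd += ["-c", "copy"]  # fast, but the cut snaps to the nearest keyframe
    cmd.append(dst)
    return cmd


def trim_video(src: str, dst: str, start_time: float, end_time: float) -> None:
    """Hypothetical tool body an FFmpeg MCP server might expose."""
    subprocess.run(build_trim_command(src, dst, start_time, end_time), check=True)


print(shlex.join(build_trim_command("podcast.mp4", "clip.mp4", 754.2, 812.0)))
# ffmpeg -y -ss 754.200 -i podcast.mp4 -t 57.800 -c copy clip.mp4
```

Building the argument list separately from running it keeps the command easy to log and test, and passing a list (not a shell string) to `subprocess.run` avoids quoting problems with unusual file names.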

The “School Community” mentioned in the transcript highlights a growing secondary market of MCP developers. Users are sharing “step-by-step instructions” and likely custom MCP server configurations. This crowdsourced development accelerates the capabilities of the platform far faster than Anthropic could achieve alone. We are seeing the emergence of “MCP Marketplaces” where specialized skills (e.g., “Real Estate Data Analyzer MCP”) are traded or sold.

The release of Claude Co-work at a widely accessible price point ($20/month for Pro users) acts as a massive deflationary force on the market for digital administrative labor.1

The transcript explicitly compares the tool to a “personal AI employee”. This comparison is economically grounded.

While the AI lacks the soft skills and judgment of a human (e.g., handling a sensitive client call), it vastly outperforms humans in the mechanistic tasks of file sorting, data entry, and basic research. This suggests a bifurcation in the virtual assistant (VA) market: low-level repetitive tasks will be completely automated, forcing human VAs to move up the value chain to high-touch relationship management.

Anthropic’s pricing move, dropping the feature from the $100 “Max” tier to the $20 “Pro” tier within days, signals an aggressive play for dominance.

The transcript mentions a “free school community” for instructions. This reflects a critical barrier to entry: Prompt Literacy. Using an agent requires a different skill set than using a chatbot. Users must learn to define workflows, set permissions, and configure MCP servers. The rise of these communities indicates that “AI Operations” is becoming a distinct skill set. Just as “Excel Skills” became a requirement for office work in the 90s, “Agent Orchestration” is becoming the prerequisite for the late 2020s.

Despite the “insane” capabilities, the report must address the inherent risks of granting an AI control over a local operating system.

Feature One involves the agent moving and potentially deleting files. While the VM sandbox described above prevents system-level damage, it does not prevent user-level data loss (e.g., accidentally deleting a “Drafts” folder that the AI deemed “clutter”).
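The source does not say whether Co-work offers an undo. A common mitigation for this kind of data loss is to route every deletion through a quarantine folder and keep a move log that can be replayed in reverse. The sketch below illustrates that pattern; the helper names, trash location, and log format are hypothetical.

```python
import json
import shutil
import tempfile
import uuid
from pathlib import Path


def soft_delete(path: Path, trash: Path, log_file: Path) -> Path:
    """Move a file or folder into a quarantine folder and log where it came from."""
    trash.mkdir(parents=True, exist_ok=True)
    dest = trash / f"{uuid.uuid4().hex[:8]}-{path.name}"
    shutil.move(str(path), str(dest))
    with log_file.open("a", encoding="utf-8") as log:
        log.write(json.dumps({"from": str(path), "to": str(dest)}) + "\n")
    return dest


def undo_all(log_file: Path) -> int:
    """Restore logged moves newest-first; keep entries that could not be restored."""
    if not log_file.exists():
        return 0
    lines = log_file.read_text(encoding="utf-8").splitlines()
    entries = [json.loads(line) for line in lines if line]
    restored, remaining = 0, []
    for entry in reversed(entries):
        quarantined, original = Path(entry["to"]), Path(entry["from"])
        if quarantined.exists() and not original.exists():
            original.parent.mkdir(parents=True, exist_ok=True)
            shutil.move(str(quarantined), str(original))
            restored += 1
        else:
            remaining.append(entry)
    log_file.write_text("".join(json.dumps(e) + "\n" for e in reversed(remaining)),
                        encoding="utf-8")
    return restored


# Demo in a throwaway directory: "clean up" a Drafts folder, then undo it.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    drafts = root / "Drafts"
    drafts.mkdir()
    (drafts / "chapter1.txt").write_text("work in progress", encoding="utf-8")
    soft_delete(drafts, root / ".agent-trash", root / "moves.jsonl")
    gone_after_delete = not drafts.exists()
    restored_count = undo_all(root / "moves.jsonl")
    content_back = (drafts / "chapter1.txt").read_text(encoding="utf-8")

print(gone_after_delete, restored_count, content_back)  # True 1 work in progress
```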

Connecting the agent to Gmail and Calendar (Feature 7) involves processing highly sensitive personal data. While Anthropic emphasizes its “Constitution” and privacy policies, the existence of a “Headless Browser” (Feature 2) that can log into bank accounts or medical portals (if directed) presents a massive surface area for misuse, either by the user or through malicious prompt injection (e.g., a “poisoned” PDF that instructs the agent to exfiltrate data when it is organized).

Early reviews of the “Research Preview” note that web connectors can be “flaky” and that the agent can sometimes get stuck in loops.17 Unlike a human, who recognizes when a website is broken, an agent might endlessly retry a failed click unless it is built with robust error handling. The “Scheduled Automation” feature (Feature 9) is particularly risky here: if an automated post fails or hallucinates offensive content while the user is asleep, the reputational damage is real.
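What “robust error handling” can mean in practice is sketched below. This is a generic pattern, not Co-work's implementation: retries are capped, spaced out with exponential backoff plus random jitter, and the failure is escalated once the budget is spent instead of looping forever. The `GiveUp` exception and `flaky_click` action are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class GiveUp(Exception):
    """Raised when an action keeps failing; the agent should stop and tell the user."""


def retry(action: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `action` at most `attempts` times with exponential backoff and jitter."""
    last_error = None
    for attempt in range(attempts):
        try:
            return action()
        except Exception as exc:  # in practice, catch only errors known to be transient
            last_error = exc
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt * random.uniform(0.5, 1.0))
    raise GiveUp(f"gave up after {attempts} attempts") from last_error


# Demo: a click that fails twice before the button appears.
calls = {"n": 0}


def flaky_click() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("button not found")
    return "clicked"


print(retry(flaky_click, sleep=lambda s: None))  # clicked

try:
    retry(lambda: 1 / 0, attempts=2, sleep=lambda s: None)
    gave_up = False
except GiveUp:
    gave_up = True
```

Injecting `sleep` keeps the demo instant. For unattended scheduled runs, the `GiveUp` path is the natural place to pause the job and notify the user rather than publish anything.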

The release of Claude Co-work marks the end of the “Chatbot Era” and the beginning of the “Agentic Era.” By integrating the nine features analyzed above, ranging from local file manipulation to browser automation and custom protocol extensibility, Anthropic has created a system that does not just simulate conversation, but simulates work.

The shift is technical, architectural, and economic.

For the user, the promise of Claude Co-work is the reclamation of time. It offers a future where the computer manages itself, where data collects itself, and where the “work about work” is delegated to silicon, leaving the human free to focus on the creative and strategic endeavors that, for now, remain solely our domain.

Hassan Tahir wrote this article, drawing on his experience to clarify WordPress concepts and enhance developer understanding. Through his work, he aims to help both beginners and professionals refine their skills and tackle WordPress projects with greater confidence.