The artificial intelligence hype has raised an unsettling question: is the current AI boom comparable to the speculative mania of the late-1990s dot-com boom? Skeptical commentators note how remarkably alike investor behavior and valuations are. The question is more intricate, however, because today's contest is not only commercial but also a worldwide race to build superintelligent AI, a risk with no qualitative precedent. The critical test will come between 2026 and 2030, when infrastructure investments will either pay off in proportion to their cost or become historical cautionary tales.

A bubble arises when expectations outrun the plausible path of profits. By this definition, AI has classic bubble attributes: skyrocketing asset prices, industry concentration, and valuations detached from near-term profitability. However, the underlying technology is demonstrably paradigm-changing, so AI may be both a bubble and a legitimate supercycle at the same time. Worse, because the pursuit of superintelligent AI adds geopolitical layers that no prior technology bubble carried, those layers can further increase market volatility and systemic risk.

In October 2025, 54% of fund managers saw bubble conditions, a dramatic shift from September. Sundar Pichai acknowledged elements of irrationality in the AI investment boom, while warning that every business would suffer if the bubble collapsed.

Jared Bernstein warned on CNBC of a third bubble, citing the underlying math: OpenAI has committed to $1.4 trillion in spending (including the $500 billion Stargate project) yet projects only $13 billion in revenue, a 108-to-1 ratio. Goldman Sachs projects $527 billion in capex in 2026 alone, with McKinsey estimating $5.2 trillion in capex by 2030. AI captured 53-58% of venture capital in 2025, an unprecedented concentration.
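The 108-to-1 figure is simple division of committed spending by projected revenue. The minimal Python sketch below uses only the two OpenAI figures cited above; the function and constant names are hypothetical, chosen for illustration.

```python
def spend_to_revenue_ratio(committed_spend: float, projected_revenue: float) -> float:
    """Return how many dollars of committed spending stand behind each dollar of revenue."""
    if projected_revenue <= 0:
        raise ValueError("projected_revenue must be positive")
    return committed_spend / projected_revenue

# Figures as cited in the text, in US dollars.
OPENAI_COMMITTED_SPEND = 1.4e12   # $1.4 trillion, including Stargate
OPENAI_PROJECTED_REVENUE = 13e9   # $13 billion

ratio = spend_to_revenue_ratio(OPENAI_COMMITTED_SPEND, OPENAI_PROJECTED_REVENUE)
print(f"{ratio:.1f}-to-1")  # 107.7-to-1, which rounds to the 108-to-1 cited above
```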

What sets the current AI boom apart from earlier tech bubbles is its explicit goal of artificial superintelligence (ASI): systems whose cognitive abilities would exceed human intelligence in every domain. This endeavor has drawn government interest and capital on an unprecedented scale. The Trump administration announced a $500 billion AI infrastructure initiative, with Energy Secretary Chris Wright calling it a “Manhattan Project.” China is simultaneously pursuing aggressive AI development, creating a geopolitical race over digital governance.

Yet this analogy has dangerous flaws. Schmidt, Wang and Hendrycks warn against the Manhattan Project model, arguing that a race to ASI makes failure more likely, not less. The “Manhattan Trap” warns that competition breeds mistrust, which undermines both safety and democratic norms. This turns the bubble from a purely financial risk into a risk of geopolitical destabilization.

The physical scale of the build-out is another key difference between present-day euphoria and dot-com fantasy. OpenAI's Stargate proposes 7 gigawatts of capacity, and a reasonable estimate of the foundational infrastructure cost comes to around $57 billion per gigawatt.
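Multiplying the per-gigawatt estimate back out shows the total it implies. This is a minimal sketch that assumes only the $57 billion per gigawatt and 7 gigawatt figures given above; the helper name is hypothetical.

```python
def implied_total_cost(cost_per_gw: float, capacity_gw: float) -> float:
    """Scale a per-gigawatt cost estimate up to the full proposed capacity."""
    return cost_per_gw * capacity_gw

STARGATE_CAPACITY_GW = 7   # proposed capacity, as cited
COST_PER_GW = 57e9         # ~$57 billion per gigawatt, as cited

total = implied_total_cost(COST_PER_GW, STARGATE_CAPACITY_GW)
print(f"${total / 1e9:.0f} billion")  # $399 billion, i.e. roughly $400 billion
```

Because the $57 billion figure is itself approximate, the result is best read as "roughly $400 billion" rather than a precise total.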

The depreciation-revenue gap demands scrutiny. Tower Bridge found $40 billion in annual depreciation against $20-40 billion in revenue. To break even on 2025 capex, the AI industry would need $160 billion in annual revenue, a tenfold increase. Yet fewer than 10% of enterprise AI pilots show profitability.
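One way to read the Tower Bridge figures is as a coverage ratio: revenue divided by annual depreciation. The minimal sketch below uses only the $40 billion depreciation figure and the $20-40 billion revenue range cited above. It deliberately ignores every cost other than depreciation, so it flatters the industry. The function name is hypothetical.

```python
def depreciation_coverage(revenue: float, depreciation: float) -> float:
    """Fraction of annual depreciation covered by revenue (1.0 = revenue merely matches it)."""
    return revenue / depreciation

ANNUAL_DEPRECIATION = 40e9       # $40 billion, as cited
REVENUE_RANGE = (20e9, 40e9)     # $20-40 billion, as cited

for revenue in REVENUE_RANGE:
    coverage = depreciation_coverage(revenue, ANNUAL_DEPRECIATION)
    print(f"revenue ${revenue / 1e9:.0f}B covers {coverage:.0%} of depreciation")
```

Even at the top of the range, revenue only matches depreciation. That leaves nothing for energy, staff, or a return on capital.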

Unlike the dot-com era's loss-making darlings, today's AI leaders earn substantial income. NVIDIA, Microsoft, Google and Amazon were already established, profitable companies. NVIDIA estimated close to $120 billion in revenue for fiscal 2025, whereas OpenAI recorded $4.3 billion in revenue in the first half of 2025.

The U.S. AI market generated $41 billion in revenue in 2025, with 70% of American businesses using AI. ChatGPT reached 800 million users and 20 million paid subscribers, evidence of broad adoption and a sharp contrast with Pets.com.

Some forecasters expect superhuman coding AI by March 2027, which would make R&D approximately 4-5 times faster. A superhuman AI researcher might arrive by 2027, creating a feedback loop in which AI progress compounds exponentially. This timeline compression is itself a bubble risk: companies are now racing toward superintelligence before demonstrating sustainable commercial models for current systems.

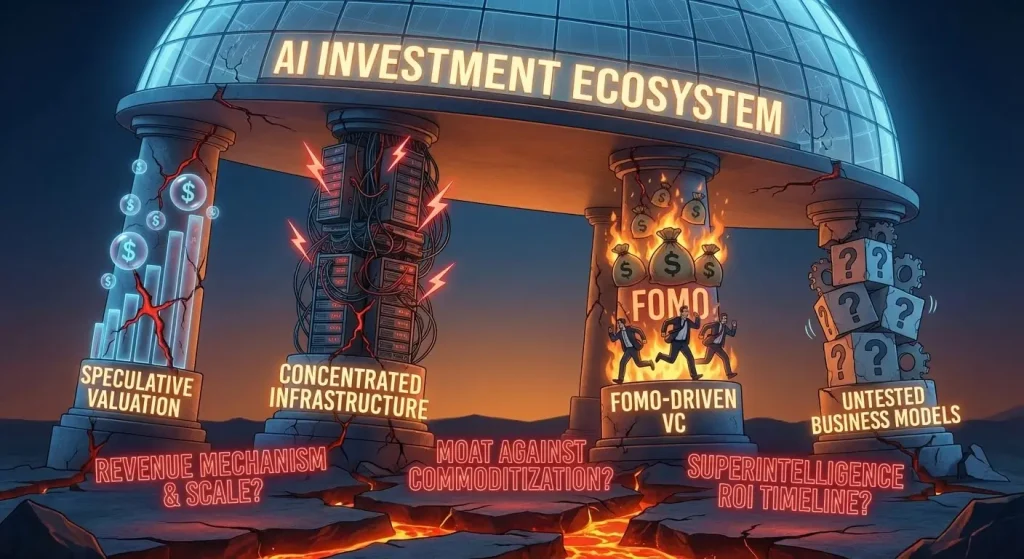

Current AI investment rests on four interconnected pillars: speculative valuation, concentrated compute infrastructure, FOMO-driven venture capital, and untested business models. A crack in one threatens the entire ecosystem. Over $300 billion in venture capital has been injected into AI in the last 24 months, driving median seed-stage valuations for GenAI startups up fourfold, a classic speculative indicator.

Investors and corporate leaders must insist on clear answers to three questions before committing capital to AI-oriented companies:

The parallels between current AI exuberance and dot-com mania are genuine and deserve serious concern. Yet the superintelligence dimension introduces a qualitatively new hazard: geopolitical competition that may force continued acceleration regardless of commercial fundamentals. The “Manhattan Trap” warns that a race mentality undermines safety, creating conditions where participants keep investing even after returns disappear.

The period from 2026 to 2030 represents the crucial testing ground. Companies demonstrating measurable productivity improvements and robust adoption will likely survive. Those making vague claims about AI transformation will face corrections. But unlike previous bubbles, the superintelligence race may prevent a rational correction: governments and competitors may keep funding regardless of profitability to avoid falling behind. This transforms the bubble from a purely financial correction risk into a geopolitical destabilization risk, making the stakes higher and the potential damage more severe than in any previous technology bubble.

Netanel Siboni is a technology leader specializing in AI, cloud, and virtualization. As the founder of Voxfor, he has guided hundreds of projects in hosting, SaaS, and e-commerce with proven results. Connect with Netanel Siboni on LinkedIn to learn more or collaborate on future projects.