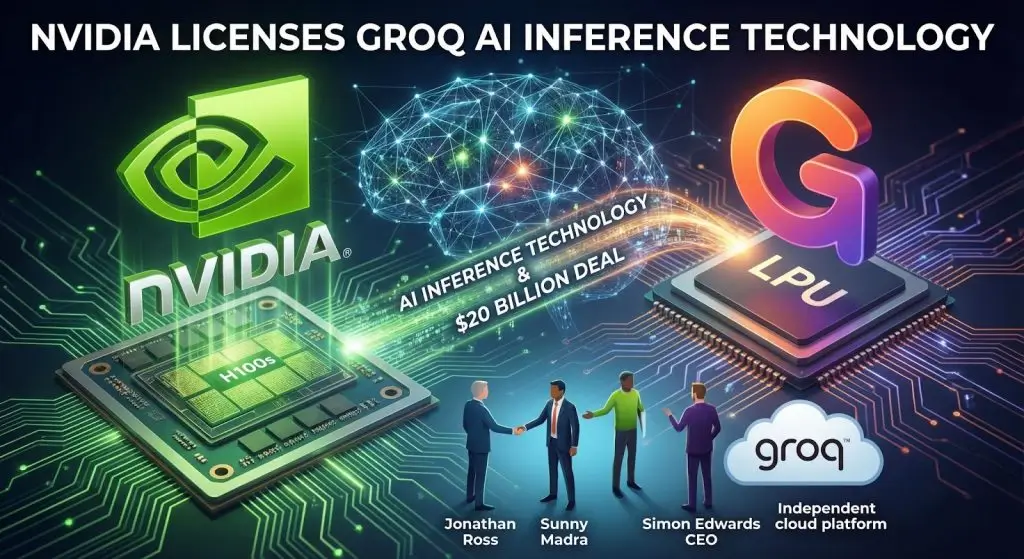

Nvidia signed a non-exclusive licensing deal with AI chip startup Groq for its inference technology. It also brought on Groq founder and CEO Jonathan Ross, President Sunny Madra, and other engineering executives to develop the technology into a component of the Nvidia AI platform.

The structure, which both companies confirmed on Wednesday, is not a full acquisition, despite earlier reports that Nvidia was buying Groq for $20 billion. Groq will remain a separate company under new management, with CFO Simon Edwards taking over as CEO. The company’s GroqCloud inference service will continue to operate.

Under the agreement, announced December 24, Nvidia gains access to Groq’s inference technology while adding the startup’s core leadership team. Jonathan Ross, who helped establish Google’s AI chip program before founding Groq in 2016, will join Nvidia along with President Sunny Madra and senior engineers.

Groq’s current CFO, Simon Edwards, will become CEO of the remaining independent entity. Edwards was previously CFO at Conga and at ServiceMax, which PTC acquired in 2023. His appointment is meant to provide continuity as Groq keeps developing and selling its Language Processing Unit (LPU) technology through its cloud platform.

Groq was most recently valued at $6.9 billion after a $750 million Series D round in September 2025, more than double its $2.8 billion valuation thirteen months earlier. The initially reported $20 billion figure appears to reflect the potential value of the assets involved rather than the consideration paid in the transaction. In any case, neither company disclosed the financial terms of the licensing agreement.
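As a quick check on the figures above, the ratios can be worked out directly. This is only an arithmetic sketch using the numbers cited in this article; the variable names are illustrative, and the $20 billion figure is the reported number, not a disclosed deal price.

```python
# Figures as cited in the article, in billions of US dollars.
latest_valuation = 6.9    # after the September 2025 Series D
prior_valuation = 2.8     # thirteen months earlier
reported_figure = 20.0    # initially reported number, not a confirmed price

# How much the valuation grew between the two rounds.
growth_multiple = latest_valuation / prior_valuation

# How the reported figure compares with the latest valuation.
reported_vs_latest = reported_figure / latest_valuation

print(f"Valuation growth: {growth_multiple:.2f}x")              # 2.46x
print(f"Reported figure vs. latest valuation: {reported_vs_latest:.2f}x")  # 2.90x
```

The first ratio is consistent with the "more than double" description; the second shows how far the reported figure sits above the last disclosed valuation.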

Groq developed the Language Processing Unit (LPU), a chip architecture designed specifically for AI inference, meaning the deployment and execution of trained models rather than the training process itself. The company uses an SRAM-only design with no reliance on off-the-shelf high-bandwidth memory, and it targets low-latency, real-time applications.

According to third-party benchmarks by ArtificialAnalysis.ai, Groq’s LPU achieved a throughput of 241 tokens per second running Meta’s Llama 2-70B model, more than double the speed of competing cloud providers. Groq’s internal tests have recorded more than 300 tokens per second under specific conditions. The company says its deterministic architecture delivers stable response times, with time-to-first-token values of up to 0.22 seconds.
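To put the throughput and time-to-first-token figures in practical terms, the sketch below estimates how long a response would take to stream. It assumes a deliberately simple model (time-to-first-token plus tokens divided by steady throughput), a hypothetical 500-token response, and a hypothetical comparison provider running at exactly half the cited throughput with the same time-to-first-token. None of these assumptions come from the benchmark itself.

```python
def generation_time(num_tokens: int, tokens_per_second: float,
                    ttft_seconds: float = 0.0) -> float:
    """Estimate response time as time-to-first-token plus steady-state decoding.

    Simplified model: ignores queuing, network latency, and throughput variation.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return ttft_seconds + num_tokens / tokens_per_second


# Figures cited in the article.
CITED_THROUGHPUT = 241.0   # tokens per second (ArtificialAnalysis.ai benchmark)
CITED_TTFT = 0.22          # seconds, time-to-first-token

# Hypothetical values for illustration only.
RESPONSE_TOKENS = 500
HALF_SPEED_THROUGHPUT = CITED_THROUGHPUT / 2

lpu_time = generation_time(RESPONSE_TOKENS, CITED_THROUGHPUT, CITED_TTFT)
half_speed_time = generation_time(RESPONSE_TOKENS, HALF_SPEED_THROUGHPUT, CITED_TTFT)

print(f"At 241 tokens/s: {lpu_time:.2f} s")             # 2.29 s
print(f"At half that speed: {half_speed_time:.2f} s")   # 4.37 s
```

Under these assumptions, doubling throughput roughly halves the streaming portion of the response, while the time-to-first-token determines how quickly the user sees any output at all.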

Jensen Huang, CEO of Nvidia, told employees in an email that the company will integrate Groq’s low-latency processors into the NVIDIA AI factory architecture and expand the platform to handle a more diverse set of AI inference and real-time workloads. Huang stressed that although Nvidia is bringing on talented employees and licensing Groq’s technology, it is not acquiring Groq as a company.

Nvidia CFO Colette Kress declined to comment on specific deal terms. The licensing arrangement allows Nvidia to strengthen its inference capabilities while Groq maintains its independent market presence.

The deal underscores the growing specialization of AI infrastructure. Although Nvidia has dominated the training chip market with its GPUs, inference workloads call for different architectures built around low latency and real-time execution.

Licensing Groq’s technology instead of acquiring the company outright gives Nvidia access to specialized technology and talent, while Groq remains a competitor in the inference space. The structure implies that Nvidia does not regard specialized inference architectures as a threat to its dominance.

For enterprise customers, the deal broadens the options for high-performance inference. Organizations can access Groq’s technology through its standalone GroqCloud service or, in the future, as part of Nvidia’s wider CUDA ecosystem. The deal also confirms the shift in AI workloads toward heterogeneous computing architectures beyond traditional GPUs.

Netanel Siboni is a technology leader specializing in AI, cloud, and virtualization. As the founder of Voxfor, he has guided hundreds of projects in hosting, SaaS, and e-commerce with proven results. Connect with Netanel Siboni on LinkedIn to learn more or collaborate on future projects.